Stanford Encyclopedia of Philosophy

Bell's Theorem is the collective name for a family of results, all showing the impossibility of a Local Realistic interpretation of quantum mechanics. There are variants of the Theorem with different meanings of “Local Realistic.” In John S. Bell's pioneering paper of 1964 the realism consisted in postulating, in addition to the quantum state, a “complete state” which determines the results of measurements on the system. It does so either by assigning a value to the measured quantity that is revealed by the measurement regardless of the details of the measurement procedure, or by enabling the system to elicit a definite response whenever it is measured. In the second case the response may depend on the macroscopic features of the experimental arrangement, or even on the complete state of the system together with that arrangement. Locality is a condition on composite systems with spatially separated constituents. It requires an operator which is the product of operators associated with the individual constituents to be assigned a value which is the product of the values assigned to the factors. It also requires the value assigned to an operator associated with an individual constituent to be independent of what is measured on any other constituent. From his assumptions Bell proved an inequality (the prototype of “Bell's Inequality”) which is violated by the Quantum Mechanical predictions made from an entangled state of the composite system. In other variants the complete state assigns probabilities to the possible results of measurements of the operators rather than determining which result will be obtained, and nevertheless inequalities are derivable; and still other variants dispense with inequalities. The incompatibility of Local Realistic Theories with Quantum Mechanics permits adjudication by experiments, some of which are described here.
Most of the dozens of experiments performed so far have favored Quantum Mechanics, but not decisively because of the “detection loophole” or the “communication loophole.” The latter has been nearly decisively blocked by a recent experiment and there is a good prospect for blocking the former. The refutation of the family of Local Realistic Theories would imply that certain peculiarities of Quantum Mechanics will remain part of our physical worldview: notably, the objective indefiniteness of properties, the indeterminacy of measurement results, and the tension between quantum nonlocality and the locality of Relativity Theory.

In 1964 John S. Bell published a paper in the short-lived journal Physics which transformed the study of the foundations of Quantum Mechanics (Bell 1964). Bell was a native of Northern Ireland and a staff member of CERN (European Organisation for Nuclear Research), and his primary research concerned theoretical high energy physics. The paper showed that no physical theory which is realistic and also local in a specified sense can agree with all of the statistical implications of Quantum Mechanics. (The conditions of the proof were relaxed in later work by Bell himself (1971, 1985, 1987) and by his followers (Clauser et al. 1969, Clauser and Horne 1974, Mermin 1986, Aspect 1983).) Many different versions and cases, with family resemblances, were inspired by the 1964 paper and are subsumed under this statement. “Bell's Theorem” is the collective name for the entire family.

One line of investigation in the prehistory of Bell's Theorem concerned the conjecture that the Quantum Mechanical state of a system needs to be supplemented by further “elements of reality” or “hidden variables” or “complete states” in order to provide a complete description. On this view, the incompleteness of the quantum state explains the statistical character of Quantum Mechanical predictions concerning the system. There are actually two main classes of hidden-variables theories. In one, which is usually called “non-contextual”, the complete state of the system determines the value of a quantity (equivalently, an eigenvalue of the operator representing that quantity) that will be obtained by any standard measuring procedure of that quantity. This holds regardless of what other quantities are simultaneously measured or what the complete state of the system and the measuring apparatus may be. The hidden-variables theories of Kochen and Specker (1967) are explicitly of this type. In the other, which is usually called “contextual”, the value obtained depends upon what quantities are simultaneously measured and/or on the details of the complete state of the measuring apparatus. This distinction was first explicitly pointed out by Bell (1966) but without using the terms “contextual” and “non-contextual”. There actually are two quite different versions of contextual hidden-variables theories, depending upon the character of the context. An “algebraic context” is one which specifies the quantities (or the operators representing them) which are measured jointly with the quantity (or operator) of primary interest. An “environmental context”, by contrast, is a specification of the physical characteristics of the measuring apparatus whereby it simultaneously measures several distinct co-measurable quantities.
In Bohm's hidden-variables theory (1952) the context is environmental, whereas in those of Bell (1966) and Gudder (1970) the context is algebraic. A pioneering version of a “hidden variables theory” was proposed by Louis de Broglie in 1926-7 (de Broglie 1927, 1928) and a more complete version by David Bohm in 1952 (Bohm 1952; see also the entry on Bohmian mechanics). In these theories the entity supplementing the quantum state (which is a wave function in the position representation) is typically a classical entity, located in a classical phase space and therefore characterized by both position and momentum variables. The classical dynamics of this entity is modified by a contribution from the wave function

(1) $\psi(x,t) = R(x,t)\,\exp[iS(x,t)/\hbar]$,

whose temporal evolution is governed by the Schrödinger Equation. Both de Broglie and Bohm assert that the velocity of the particle satisfies the “guidance equation”

(2) $v = \nabla(S/m)$,

whereby the wave function ψ acts upon the particles as a “guiding wave”.
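As an illustration of how Eq. (2) is used, note that the velocity field can be computed from ψ alone. Writing ψ = R exp(iS/ħ) gives ∇S = ħ Im(∇ψ/ψ). The following is a minimal one-dimensional sketch. It assumes units with ħ = m = 1 and approximates the derivative by a central finite difference. The function name `guidance_velocity` and the two sample wave functions are hypothetical choices for illustration, not part of de Broglie's or Bohm's presentation.

```python
import cmath
import math

HBAR = 1.0  # assumption: units with hbar = 1
MASS = 1.0  # assumption: particle mass m = 1


def guidance_velocity(psi, x, h=1e-5):
    """Eq. (2) in one dimension: v = (1/m) dS/dx.

    With psi = R exp(iS/hbar), dS/dx = hbar * Im(psi'(x) / psi(x)), so the
    phase never has to be unwrapped. psi'(x) uses a central difference.
    """
    dpsi = (psi(x + h) - psi(x - h)) / (2.0 * h)
    return HBAR * (dpsi / psi(x)).imag / MASS


k = 2.5  # hypothetical wave number


def plane_wave(x):
    """exp(ikx): S = hbar k x, so the guidance velocity is hbar k / m everywhere."""
    return cmath.exp(1j * k * x)


def real_gaussian(x):
    """A real wave function has constant phase, so its guidance velocity is zero."""
    return complex(math.exp(-x * x / 4.0))


print(guidance_velocity(plane_wave, 0.3), guidance_velocity(real_gaussian, 0.3))
```

For the plane wave the result approaches ħk/m = 2.5, up to a small discretization error. For the real Gaussian it is zero: a real wave function has constant phase S, so the guidance equation leaves the particle at rest.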

De Broglie (1928) and the school of “Bohmian mechanics” (notably Dürr, Goldstein, and Zanghì (1992)) postulate the guidance equation without an attempt to derive it from a more fundamental principle. Bohm (1952), however, proposes a deeper justification of the guidance equation. He postulates a modified version of Newton's second law of motion:

(3) $m\,\dfrac{d^2x}{dt^2} = -\nabla\left[V(x,t) + U(x,t)\right]$,

where V(x, t) is the standard classical potential and U(x, t) is a new entity, the “quantum potential”,

(4) $U(x,t) = -\dfrac{\hbar^2}{2m}\,\dfrac{\nabla^2 R(x,t)}{R(x,t)}$,

and he proves the following: if the guidance equation holds at an initial time t₀, then it follows from Eqs. (2), (3), (4) and the time-dependent Schrödinger Equation that it holds for all time. Bohm deserves credit for attempting to justify the guidance equation. There is, however, a tension between that equation and the modified Newtonian equation (3), which has been analyzed by Baublitz and Shimony (1996). Eq. (3) is a second-order differential equation in time and does not determine a definite solution for all t without two initial conditions, namely x and v at t₀.

Since v at t₀ is a contingency, the validity of the guidance equation at t₀ (and hence at all other times) is contingent. Bohm recognizes this gap in his theory and discusses possible solutions (Bohm 1952, p. 179), without reaching a definite proposal. If, however, this difficulty is set aside, a solution is provided to the measurement problem of standard quantum mechanics. That problem is to account for the occurrence of a definite outcome when the system of interest is prepared in a superposition of eigenstates of the operator which is subjected to measurement. Furthermore, the guidance equation ensures agreement with the statistical predictions of standard quantum mechanics. The hidden variables model using the guidance equation inspired Bell to take seriously the hidden variables interpretation of Quantum Mechanics, and the nonlocality of this model suggested his theorem.
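The quantum potential of Eq. (4) can likewise be evaluated numerically. The sketch below assumes one dimension, units with ħ = m = 1, and a hypothetical Gaussian amplitude R(x) = exp(−x²/4w²). It compares a finite-difference evaluation of U with the closed form obtained by differentiating that R twice. Because U depends on R only through the ratio R''/R, the normalization of R does not matter. All function names are illustrative.

```python
import math

HBAR = 1.0  # assumption: units with hbar = 1
MASS = 1.0  # assumption: particle mass m = 1


def quantum_potential(R, x, h=1e-4):
    """Eq. (4) in one dimension: U = -(hbar^2 / 2m) R''(x) / R(x).

    R'' is approximated by a second central difference; R(x) must be nonzero.
    """
    d2R = (R(x + h) - 2.0 * R(x) + R(x - h)) / (h * h)
    return -(HBAR ** 2 / (2.0 * MASS)) * d2R / R(x)


def gaussian_U_exact(x, width):
    """Closed form of Eq. (4) for R(x) = exp(-x^2 / (4 width^2))."""
    return (HBAR ** 2 / (2.0 * MASS)) * (1.0 / (2.0 * width ** 2) - x ** 2 / (4.0 * width ** 4))


width = 0.8  # hypothetical packet width


def R(x):
    """Hypothetical real amplitude of a Gaussian packet of the given width."""
    return math.exp(-x * x / (4.0 * width ** 2))


for x in (0.0, 0.5, 1.5):
    print(x, quantum_potential(R, x), gaussian_U_exact(x, width))
```

For this Gaussian, U is largest at the center of the packet and decreases quadratically away from it.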

Another approach to the hidden variables conjecture has been to investigate whether the algebraic structure of the physical quantities characterized by Quantum Mechanics is consistent with a hidden variables interpretation. Standard Quantum Mechanics assumes that the “propositions” concerning a physical system are isomorphic to the lattice L(H) of closed linear subspaces of a Hilbert space H (equivalently, to the lattice of projection operators on H), with the following conditions:

(1) the proposition whose truth value is necessarily ‘true’ is matched with the entire space H;

(2) the proposition whose truth value is necessarily ‘false’ is matched with the zero subspace 0;

(3) if a subspace S is matched with a proposition q, then the orthogonal complement of S is matched with the negation of q;

(4) the proposition q, whose truth value is ‘true’ if the truth value of either q₁ or q₂ is ‘true’ and ‘false’ if the truth values of both q₁ and q₂ are ‘false’, is matched with the closed linear span of the union of the spaces S₁ and S₂ respectively matched to q₁ and q₂. This span is the closure of the set of all vectors which can be expressed as the sum of a vector in S₁ and a vector in S₂.

It should be emphasized that this matching does not presuppose that a proposition is necessarily either true or false. It is therefore compatible with the quantum mechanical indefiniteness of a truth value, which in turn underlies the feature of quantum mechanics that a physical quantity may be indefinite in value. The type of hidden variables interpretation which has been most extensively treated in the literature is a mapping m of the lattice L(H) into the pair {1, 0}. (It is often called a “non-contextual hidden variables interpretation”, for a reason which will soon be apparent.) Here m(S) = 1 intuitively means that the proposition matched with S is true, and m(S) = 0 intuitively means that the proposition matched with S is false.
A mathematical question of importance is whether there exist such mappings for which these intuitive interpretations are maintained and conditions (1)-(4) are satisfied. A negative answer to this question for all L(H) where the Hilbert space H has dimensionality greater than 2 is implied by a deep theorem of Gleason (1957). That theorem does more, by providing a complete catalogue of possible probability functions on L(H). John Bell (1966) provides the same negative answer much more simply, though without Gleason's complete catalogue of probability functions, and he also provides a positive answer to the question in the case of dimensionality 2. Kochen and Specker (1967) independently achieved these results as well. It should be added that in the case of dimensionality 2 the statistical predictions of any quantum state can be recovered by an appropriate mixture of the mappings m. (See also the entry on the Kochen-Specker theorem.)
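For small finite sets of rays, a computer can check that no such mappings exist in dimension greater than 2. The sketch below does not reproduce Gleason's, Bell's, or Kochen and Specker's constructions. Instead it swaps in the 18-ray, 9-basis set in four dimensions usually credited to Cabello, Estebaranz and García-Alcaine (1996). It uses the standard reformulation of conditions (1)-(4) for one-dimensional subspaces: a mapping m must assign 1 to exactly one ray of every orthogonal basis. The helper names are hypothetical. The exhaustive search over all 2¹⁸ assignments is chosen for transparency rather than speed.

```python
# Nine orthogonal bases of R^4. Each ray is written with the same integer
# representative wherever it occurs; the 18 distinct rays each lie in two bases.
BASES_4D = [
    [(0, 0, 0, 1), (0, 0, 1, 0), (1, 1, 0, 0), (1, -1, 0, 0)],
    [(0, 0, 0, 1), (0, 1, 0, 0), (1, 0, 1, 0), (1, 0, -1, 0)],
    [(1, -1, 1, -1), (1, -1, -1, 1), (1, 1, 0, 0), (0, 0, 1, 1)],
    [(1, -1, 1, -1), (1, 1, 1, 1), (1, 0, -1, 0), (0, 1, 0, -1)],
    [(0, 0, 1, 0), (0, 1, 0, 0), (1, 0, 0, 1), (1, 0, 0, -1)],
    [(1, -1, -1, 1), (1, 1, 1, 1), (1, 0, 0, -1), (0, 1, -1, 0)],
    [(1, 1, -1, 1), (1, 1, 1, -1), (1, -1, 0, 0), (0, 0, 1, 1)],
    [(1, 1, -1, 1), (-1, 1, 1, 1), (1, 0, 1, 0), (0, 1, 0, -1)],
    [(1, 1, 1, -1), (-1, 1, 1, 1), (1, 0, 0, 1), (0, 1, -1, 0)],
]

# Two orthogonal bases of R^2, for comparison with the dimension-2 case.
BASES_2D = [
    [(1, 0), (0, 1)],
    [(1, 1), (1, -1)],
]


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def all_orthogonal(bases):
    """Check that the vectors within each basis are mutually orthogonal."""
    return all(
        dot(basis[i], basis[j]) == 0
        for basis in bases
        for i in range(len(basis))
        for j in range(i + 1, len(basis))
    )


def find_valuation(bases):
    """Return a 0/1 assignment with exactly one 1 per basis, or None if none exists.

    Exhaustive search over all 2**n assignments of the n distinct rays,
    so it is only practical for small sets such as these.
    """
    rays = sorted({v for basis in bases for v in basis})
    index = {v: i for i, v in enumerate(rays)}
    masks = [sum(1 << index[v] for v in basis) for basis in bases]
    for assignment in range(1 << len(rays)):
        if all(bin(assignment & mask).count("1") == 1 for mask in masks):
            return {v: (assignment >> index[v]) & 1 for v in rays}
    return None


print(all_orthogonal(BASES_4D), find_valuation(BASES_4D))
print(all_orthogonal(BASES_2D), find_valuation(BASES_2D))
```

The search returns None for the four-dimensional set, as a counting argument predicts. Every ray lies in exactly two of the nine bases, so summing m over all bases counts each true ray twice and gives an even number. Yet nine bases with exactly one true ray each give 9. For the two-dimensional example, where each ray belongs to a single basis, a valuation is found at once. This is in line with the positive answer for dimensionality 2.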

In Bell (1966), Bell first presents a strong case against the hidden variables program (except for the special case of dimensionality 2). He then performs a dramatic reversal by introducing a new type of hidden variables interpretation: one in which the truth value which m assigns to a subspace S depends upon the context C of propositions measured in tandem with the one associated with S. These interpretations are commonly referred to as “contextual hidden variables interpretations”, whereas those in which there is no dependence upon the context are called “non-contextual.” Bell proves the consistency of contextual hidden variables interpretations with the algebraic structure of the lattice L(H) for two examples of H with dimension greater than 2. (His proposal has been systematized by Gudder (1970), who takes a context C to be a maximal Boolean subalgebra of the lattice L(H) of subspaces.)

Another line leading to Bell's Theorem was the investigation of Quantum Mechanically entangled states, that is, quantum states of a composite system that cannot be expressed as direct products of quantum states of the individual components. Erwin Schrödinger (1926) discovered in one of his pioneering papers that Quantum Mechanics admits such entangled states, but the significance of this discovery was not emphasized until the paper of Einstein, Podolsky, and Rosen (1935). They examined correlations between the positions and the linear momenta of two well separated spinless particles. They concluded that, in order to avoid an appeal to nonlocality, these correlations could only be explained by “elements of physical reality” in each particle, specifically both definite position and definite momentum. Since this description is richer than the uncertainty principle of Quantum Mechanics permits, their conclusion is effectively an argument for a hidden variables interpretation. (It should be emphasized that their argument does not depend upon counterfactual reasoning, that is, reasoning about what would be observed if a quantity were measured other than the one that was in fact measured. Instead, their argument can be reformulated entirely in ordinary inductive logic, as emphasized by d'Espagnat (1976) and Shimony (2001). This reformulation is important because it diminishes the force of Bohr's (1935) rebuttal of Einstein, Podolsky, and Rosen. Bohr argued that one is not entitled to draw conclusions about the existence of elements of physical reality from considerations of what would be seen if a measurement other than the actual one had been performed. Bell was skeptical of Bohr's rebuttal for other reasons, essentially that he regarded it as anthropocentric.[1] See also the entry on the Einstein-Podolsky-Rosen paradox.)

At the conclusion of Bell (1966), Bell gives a new lease on life to the hidden variables program by introducing the notion of contextual hidden variables. He then performs another dramatic reversal by raising a question about a composite system consisting of two well separated particles 1 and 2. Suppose a proposition associated with a subspace S₁ of the Hilbert space of particle 1 is subjected to measurement, and a contextual hidden variables theory assigns the truth value m(S₁/C) to this proposition. What physically reasonable conditions can be imposed upon the context C? Bell suggests that C should consist only of propositions concerning particle 1. Otherwise the outcome of the measurement upon 1 would depend upon what operations are performed upon a remote particle 2, and that would be non-locality. This condition raises the question: can the statistical predictions of Quantum Mechanics concerning the entangled state be duplicated by a contextual hidden variables theory in which the context C is localized? It is interesting to note that this question also arises from a consideration of the de Broglie-Bohm model. Bohm derives the statistical predictions of a Quantum Mechanically entangled system whose constituents are well separated. In that derivation, the outcome of a measurement made on one constituent depends upon the action of the “guiding wave” upon constituents that are far off, and this action will in general depend on the measurement arrangement on that side. Bell was thus heuristically led to ask whether a lapse of locality is necessary for the recovery of Quantum Mechanical statistics.

Bell (1964) gives a pioneering proof of the theorem that bears his name. He first makes explicit a conceptual framework within which expectation values can be calculated for the spin components of a pair of spin-half particles. He then shows that, regardless of the choices that are made for certain unspecified functions that occur in the framework, the expectation values obey a certain inequality which has come to be called “Bell's Inequality.” That term is now commonly used to denote collectively a family of Inequalities derived in conceptual frameworks similar to but more general than the original one of Bell. Sometimes these Inequalities are referred to as “Inequalities of Bell's type.” Each of these conceptual frameworks incorporates some type of hidden variables theory and obeys a locality assumption. The name “Local Realistic Theory” is also appropriate and will be used throughout this article because of its generality. Bell calculates the expectation values for certain products of the form (σ₁·â)(σ₂·ê). Here σ₁ and σ₂ are the vectorial Pauli spin operators for particles 1 and 2 respectively (both particles having spin ½), and â and ê are unit vectors in three-space. He then shows that these Quantum Mechanical expectation values violate Bell's Inequality. This violation constitutes a special case of Bell's Theorem, stated in generic form in Section 1. It shows that no Local Realistic Theory subsumed under the framework of Bell's 1964 paper can agree with all of the statistical predictions of quantum theory.

In the present section the pattern of Bell's 1964 paper will be followed: formulation of a framework, derivation of an Inequality, demonstration of a discrepancy between certain quantum mechanical expectation values and this Inequality. However, a more general conceptual framework than his will be assumed and a somewhat more general Inequality will be derived, thus yielding a more general theorem than the one derived by Bell in 1964, but with the same strategy and in the same spirit. Papers which took the steps from Bell's 1964 demonstration to the one given here are Clauser et al. (1969), Bell (1971), Clauser and Horne (1974), Aspect (1983) and Mermin (1986).[2] Other strategies for deriving Bell-type theorems will be mentioned in Section 6, but with less emphasis because they have, at least so far, been less important for experimental tests.

The conceptual framework in which a Bell-type Inequality will be demonstrated first of all postulates an ensemble of pairs of systems, the individual systems in each pair being labeled as 1 and 2. Each pair of systems is characterized by a “complete state” m which contains the entirety of the properties of the pair at the moment of generation. The complete state m may differ from pair to pair. The mode of generation of the pairs, however, establishes a probability distribution ρ which is independent of the adventures of each of the two systems after they separate. Different experiments can be performed on each system, those on 1 designated by a, a′, etc. and those on 2 by b, b′, etc. One can in principle let a, a′, etc. also include characteristics of the apparatus used for the measurement. However, the dependence of the result upon the microscopic features of the apparatus is not determinable experimentally. Hence only the macroscopic features of the apparatus (such as orientations of the polarization analyzers) in their incompletely controllable environment need be admitted in practice in the descriptions a, a′, etc., and likewise for b, b′, etc. Correlations measured in Bell-type experiments agree remarkably well with the quantum mechanical predictions of these correlations (see Section 3). This agreement justifies restricting attention in practice to macroscopic features of the apparatus. The result of an experiment on 1 is labeled by s, which can take on any of a discrete set of real numbers in the interval [−1, 1]. Likewise the result of an experiment on 2 is labeled by t, which can take on any of a discrete set of real numbers in [−1, 1]. (Bell's own version of his theorem assumed that s and t are both bivalent, either −1 or 1, but other ranges are assumed in other variants of the theorem.)

The following probabilities are assumed to be well defined:

(5) $p^1_m(s \mid a, b, t)$ = the probability that the outcome of the measurement performed on 1 is s when m is the complete state, the measurements performed on 1 and 2 respectively are a and b, and the result of the experiment on 2 is t;

(6) $p^2_m(t \mid a, b, s)$ = the probability that the outcome of the measurement performed on 2 is t when the complete state is m, the measurements performed on 1 and 2 are respectively a and b, and the result of the measurement a is s;

(7) $p_m(s, t \mid a, b)$ = the probability that the results of the joint measurements a and b, when the complete state is m, are respectively s and t.

The probability function p will be assumed to be non-negative and to sum to unity when the summation is taken over all allowed values of s and t. (Note that the hidden variables theories considered in Section 1 can be subsumed under this conceptual framework by restricting the values of the probability function p to 1 and 0. The former is identified with the truth value “true” and the latter with the truth value “false”.)

A further feature of the conceptual framework is locality, which is understood as the conjunction of the following Independence Conditions:

Remote Outcome Independence (this name is a neologism, but an appropriate one, for what is commonly called outcome independence):

(8a) $p^1_m(s \mid a, b, t) \equiv p^1_m(s \mid a, b)$ is independent of t,

(8b) $p^2_m(t \mid a, b, s) \equiv p^2_m(t \mid a, b)$ is independent of s;

(Note that Eqs. (8a) and (8b) do not preclude correlations of the results of the experiment a on 1 and the experiment b on 2; they say rather that if the complete state m is given, the outcome s of the experiment on 1 provides no additional information regarding the outcome of the experiment on 2, and conversely.)

Remote Context Independence (this also is a neologism, but an appropriate one, for what is commonly called parameter independence):

(9a) $p^1_m(s \mid a, b) \equiv p^1_m(s \mid a)$ is independent of b,

(9b) $p^2_m(t \mid a, b) \equiv p^2_m(t \mid b)$ is independent of a.

Jarrett (1984) and Bell (1990) demonstrated the equivalence of the conjunction of (8a,b) and (9a,b) to the factorization condition:

(10) $p_m(s, t \mid a, b) = p^1_m(s \mid a)\, p^2_m(t \mid b)$,

and likewise for (a, b′), (a′, b), and (a′, b′) substituted for (a, b).
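One direction of this equivalence, from factorization to the Independence Conditions, can be checked mechanically. The sketch below fixes a single complete state m with hypothetical response probabilities: exact fractions, outcomes ±1, and two settings per side. It builds the joint distribution by Eq. (10) and recovers the conditional probability p¹_m(s | a, b, t) from it. It then confirms that this conditional depends neither on t (8a) nor on b (9a). The checks for particle 2, (8b) and (9b), are symmetric and omitted. For contrast, the sketch also tests a hypothetical perfectly correlated joint distribution that does not factorize. The converse direction proved by Jarrett and Bell is not exercised here.

```python
from fractions import Fraction

OUTCOMES = (-1, 1)

# Hypothetical response probabilities p1_m(s | a) and p2_m(t | b) for one complete state m.
P1 = {"a": {1: Fraction(3, 4), -1: Fraction(1, 4)},
      "a'": {1: Fraction(1, 3), -1: Fraction(2, 3)}}
P2 = {"b": {1: Fraction(1, 2), -1: Fraction(1, 2)},
      "b'": {1: Fraction(1, 5), -1: Fraction(4, 5)}}


def joint_factorized(s, t, a, b):
    """Eq. (10): p_m(s, t | a, b) = p1_m(s | a) * p2_m(t | b)."""
    return P1[a][s] * P2[b][t]


def joint_correlated(s, t, a, b):
    """A non-factorizing joint distribution: s = t always, each value with probability 1/2."""
    return Fraction(1, 2) if s == t else Fraction(0)


def cond_1(joint, s, a, b, t):
    """p1_m(s | a, b, t) obtained from the joint distribution by conditioning on t."""
    return joint(s, t, a, b) / sum(joint(x, t, a, b) for x in OUTCOMES)


def independence_holds_for_1(joint):
    """True if p1_m(s | a, b, t) is the same for every b and t, i.e. (8a) and (9a) hold."""
    for a in P1:
        for s in OUTCOMES:
            values = {cond_1(joint, s, a, b, t) for b in P2 for t in OUTCOMES}
            if len(values) != 1:
                return False
    return True


print(independence_holds_for_1(joint_factorized))
print(independence_holds_for_1(joint_correlated))
```

The correlated example does not depend on the settings at all. It therefore satisfies Remote Context Independence and fails only Remote Outcome Independence.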

The factorizability condition Eq. (10) is also often referred to as Bell locality. It should be emphasized, however, that at the present stage of exposition Bell locality is merely a mathematical condition within a conceptual framework, and no physical significance has been attached to it. In particular, no connection to the locality of Special Relativity Theory has yet been made. Such a connection will be made later, when experimental applications of Bell's theorem are discussed.

Bell's Inequality is derivable from his locality condition by means of a simple lemma:

(11) If q, q′, r, r′ all belong to the closed interval [−1, 1], then $S \equiv qr + qr' + q'r - q'r'$ belongs to the closed interval [−2, 2].

Proof: S is linear in each of the four variables q, q′, r, r′ separately. It therefore takes on its maximum and minimum values at the corners of the domain of this quadruple of variables, that is, where each of q, q′, r, r′ is +1 or −1. Now S can be rewritten as (q + q′)(r + r′) − 2q′r′, and at a corner each quantity in parentheses is 0, 2, or −2. If either of them is 0, then S = −2q′r′ = ±2. If neither is 0, then q = q′ and r = r′, so that (q + q′)(r + r′) = 4q′r′ and S = 2q′r′ = ±2. Hence S equals ±2 at every corner and lies in [−2, 2] everywhere in the domain. Q.E.D.
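The lemma can also be spot-checked numerically. This minimal sketch, whose function name is hypothetical, evaluates S at all sixteen corners of [−1, 1]⁴ and at random points of the domain drawn with a fixed seed. It illustrates the proof but does not replace it.

```python
import itertools
import random


def chsh_combination(q, qp, r, rp):
    """S = qr + qr' + q'r - q'r' from lemma (11)."""
    return q * r + q * rp + qp * r - qp * rp


# At the 16 corners of [-1, 1]^4 the combination only takes the values -2 and +2.
corner_values = {chsh_combination(*c) for c in itertools.product((-1, 1), repeat=4)}
print(sorted(corner_values))  # [-2, 2]

# Random points of the domain (fixed seed) never leave [-2, 2].
rng = random.Random(0)
worst = max(
    abs(chsh_combination(*(rng.uniform(-1, 1) for _ in range(4))))
    for _ in range(100_000)
)
print(worst)
```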

Now define the expectation value of the product st of outcomes:

(12) $E_m(a, b) \equiv \sum_s \sum_t p_m(s, t \mid a, b)\, st$, the summation being taken over all the allowed values of s and t,

and likewise with (a, b′), (a′, b), and (a′, b′) substituted for (a, b). Also take the quantities q, q′, r, r′ of the above lemma (11) to be the single expectation values:

(13a) $q = \sum_s s\, p^1_m(s \mid a)$,

(13b) $q' = \sum_s s\, p^1_m(s \mid a')$,

(13c) $r = \sum_t t\, p^2_m(t \mid b)$,

(13d) $r' = \sum_t t\, p^2_m(t \mid b')$.

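Then the lemma, together with Eq. (12), factorization condition Eq. (10), and the bounds on s and t stated prior to Eq. (5), implies Ineq. (14). The key step is that, by Eq. (10), the double sum in Eq. (12) splits into a product of single sums. Thus E_m(a, b) = qr, E_m(a, b′) = qr′, E_m(a′, b) = q′r and E_m(a′, b′) = q′r′. Since s and t lie in [−1, 1], so do the averages q, q′, r, r′. Applying the lemma to these four numbers gives: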

(14) $-2 \le E_m(a,b) + E_m(a,b') + E_m(a',b) - E_m(a',b') \le 2$.
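The key step, that factorization turns each E_m into a product of single expectation values, can be checked with randomly generated local response distributions. For illustration only, the sketch assumes the outcome set {−1, 0, 1}, a discrete subset of [−1, 1] as the framework allows. It draws the four marginals p¹_m(·|a), p¹_m(·|a′), p²_m(·|b), p²_m(·|b′) from a fixed-seed generator. All names are hypothetical.

```python
import random

OUTCOMES = (-1, 0, 1)  # assumption: one admissible discrete outcome set inside [-1, 1]


def random_marginal(rng):
    """A hypothetical response distribution p(s | setting) over OUTCOMES."""
    weights = [rng.random() for _ in OUTCOMES]
    total = sum(weights)
    return {s: w / total for s, w in zip(OUTCOMES, weights)}


def single_mean(dist):
    """Eqs. (13a)-(13d): the single expectation value sum_s s p(s | setting)."""
    return sum(s * p for s, p in dist.items())


def E_m(p1, p2):
    """Eq. (12) evaluated on the factorized joint distribution of Eq. (10)."""
    return sum(p1[s] * p2[t] * s * t for s in OUTCOMES for t in OUTCOMES)


def worst_combination(trials=10_000, seed=1):
    """Largest |E_m(a,b) + E_m(a,b') + E_m(a',b) - E_m(a',b')| over random local states."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        pa, pa2, pb, pb2 = (random_marginal(rng) for _ in range(4))
        # Under factorization each E_m is a product of single means (q r, q r', ...).
        assert abs(E_m(pa, pb) - single_mean(pa) * single_mean(pb)) < 1e-12
        combo = E_m(pa, pb) + E_m(pa, pb2) + E_m(pa2, pb) - E_m(pa2, pb2)
        worst = max(worst, abs(combo))
    return worst


print(worst_combination())
```

Because every outcome here has positive probability, the single means stay strictly inside (−1, 1) and the bound 2 is approached but not reached. Deterministic responses of ±1 would attain it.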

Finally, return to the fact that the ensemble of interest consists of pairs of systems, each of which is governed by a mapping m. The mapping m is chosen stochastically from a space M of mappings governed by a standard probability function ρ. That is, for every Borel subset B of M, ρ(B) is a non-negative real number, ρ(M) = 1, and $\rho(\bigcup_j B_j) = \sum_j \rho(B_j)$, where the Bⱼ's are disjoint Borel subsets of M and $\bigcup_j B_j$ is the set-theoretical union of the Bⱼ's. If we define

(15a) $p_\rho(s, t \mid a, b) \equiv \int_M p_m(s, t \mid a, b)\, d\rho$,

(15b) $E_\rho(a, b) \equiv \int_M E_m(a, b)\, d\rho = \sum_s \sum_t \int_M p_m(s, t \mid a, b)\, st\, d\rho$,

and likewise when (a, b′), (a′, b), and (a′, b′) are substituted for (a, b), then (14), (15a,b), and the properties of ρ imply

(16) $-2 \le E_\rho(a,b) + E_\rho(a,b') + E_\rho(a',b) - E_\rho(a',b') \le 2$.

Ineq. (16) is an Inequality of Bell's type, henceforth called the “Bell-Clauser-Horne-Shimony-Holt (BCHSH) Inequality.”
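The mixture step can be seen concretely in a restricted setting. As an assumption for illustration, the sketch below considers only deterministic complete states with outcomes ±1. Each such m is just a choice of outcomes (A, A′) for a, a′ on side 1 and (B, B′) for b, b′ on side 2, so that E_m(a, b) = AB and so on. A discrete ρ is then a list of weights over the sixteen such states, and E_ρ is the weighted average of Eq. (15b). The names are hypothetical.

```python
import itertools
import random

# Each deterministic complete state m = (A, A', B, B'): outcomes for settings a, a', b, b'.
STATES = list(itertools.product((-1, 1), repeat=4))
SIDE_1 = {"a": 0, "a'": 1}
SIDE_2 = {"b": 2, "b'": 3}


def E_rho(weights, x, y):
    """Eq. (15b) for a discrete rho: sum over m of rho(m) * E_m(x, y)."""
    return sum(w * m[SIDE_1[x]] * m[SIDE_2[y]] for w, m in zip(weights, STATES))


def bchsh(weights):
    """Combination bounded in Ineq. (16)."""
    return (E_rho(weights, "a", "b") + E_rho(weights, "a", "b'")
            + E_rho(weights, "a'", "b") - E_rho(weights, "a'", "b'"))


def random_weights(rng):
    """A hypothetical probability distribution rho over the sixteen states."""
    raw = [rng.random() for _ in STATES]
    total = sum(raw)
    return [r / total for r in raw]


# A point mass on each deterministic state gives exactly +2 or -2 ...
point_masses = [[1.0 if i == k else 0.0 for i in range(len(STATES))] for k in range(len(STATES))]
print(sorted({bchsh(w) for w in point_masses}))

# ... so any mixture, being an average of these values, stays within [-2, 2].
rng = random.Random(2)
print(max(abs(bchsh(random_weights(rng))) for _ in range(2000)))
```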

The third step in the derivation of a theorem of Bell's type is to exhibit a system, a quantum mechanical state, and a set of quantities for which the statistical predictions violate Inequality (16). Let the system consist of a pair of photons 1 and 2 propagating respectively in the z and −z directions. The two kets |x>ⱼ and |y>ⱼ constitute a polarization basis for photon j (j = 1, 2). In Dirac's notation, the former represents a state in which photon j is linearly polarized in the x-direction, and the latter a state in which it is linearly polarized in the y-direction. For the two-photon system the four product kets |x>₁|x>₂, |x>₁|y>₂, |y>₁|x>₂, and |y>₁|y>₂ constitute a polarization basis. Each two-photon polarization state can be expressed as a linear combination of these four basis states with complex coefficients. Of particular interest are the entangled quantum states, which in no way can be expressed as |φ>₁|ξ>₂, with |φ> and |ξ> single-photon states, an example being

(17) $|\Phi\rangle = \frac{1}{\sqrt{2}}\left[\,|x\rangle_1 |x\rangle_2 + |y\rangle_1 |y\rangle_2\,\right]$,

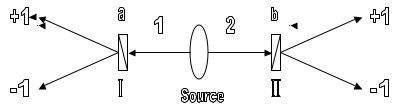

which has the useful property of being invariant under rotation of the x and y axes in the plane perpendicular to z. Neither photon 1 nor photon 2 is in a definite polarization state when the pair is in the state |Ōą>, but their potentialities (in the terminology of Heisenberg 1958) are correlated: if by measurement or some other process the potentiality of photon 1 to be polarized along the x-direction or along the y-direction is actualized, then the same will be true of photon 2, and conversely. Suppose now that photons 1 and 2 impinge respectively on the faces of birefringent crystal polarization analyzers I and II, with the entrance face of each analyzer perpendicular to z. Each analyzer has the property of separating light incident upon its face into two outgoing non-parallel rays, the ordinary ray and the extraordinary ray. The transmission axis of the analyzer is a direction with the property that a photon polarized along it will emerge in the ordinary ray (with certainty if the crystals are assumed to be ideal), while a photon polarized in a direction perpendicular to z and to the transmission axis will emerge in the extraordinary ray. See Figure 1:

Figure 1

(reprinted with permission)

Photon pairs are emitted from the source, each pair quantum mechanically described by |ő¶> of Eq. (17), and by a complete state m if a Local Realistic Theory is assumed. I and II are polarization analyzers, with outcome s=1 and t=1 designating emergence in the ordinary ray, while s = ‚ą'1 and t = ‚ą'1 designate emergence in the extraordinary ray.

The crystals are also idealized by assuming that no incident photon is absorbed, but each emerges in either the ordinary or the extraordinary ray. Quantum mechanics provides an algorithm for computing the probabilities that photons 1 and 2 will emerge from these idealized analyzers in specified rays, as functions of the orientations a and b of the analyzers, a being the angle between the transmission axis of analyzer I and an arbitrary fixed direction in the x-y plane, and b having the analogous meaning for analyzer II:

(18a) $\mathrm{prob}_\Phi(s, t \mid a, b) = \left|\langle\Phi|\,\big(|\theta_s\rangle_1 |\varphi_t\rangle_2\big)\right|^2$.

Here s is a quantum number associated with the ray into which photon 1 emerges when a is given: +1 indicates emergence in the ordinary ray and −1 emergence in the extraordinary ray. Likewise t is the analogous quantum number for photon 2 when b is given. The ket |θ_s>₁|φ_t>₂ represents the quantum state of photons 1 and 2 with the respective quantum numbers s and t. Calculation of the probabilities of interest from Eq. (18a) can be simplified by using the invariance noted after Eq. (17) and rewriting |Φ> as

(19) $|\Phi\rangle = \frac{1}{\sqrt{2}}\left[\,|\theta_1\rangle_1 |\theta_1\rangle_2 + |\theta_{-1}\rangle_1 |\theta_{-1}\rangle_2\,\right]$.

Eq. (19) results from Eq. (17) by substituting the transmission axis of analyzer I for x and the direction perpendicular to both z and this transmission axis for y.
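The invariance used here can be verified directly in the product basis |x>₁|x>₂, |x>₁|y>₂, |y>₁|x>₂, |y>₁|y>₂. In this minimal sketch the polarization kets are taken to be real, with |θ₁> at angle a from the x-axis and |θ₋₁> at a + π/2. Using the x-axis as the fixed reference direction is an assumption, and the helper names are hypothetical.

```python
import math

SQRT2 = math.sqrt(2.0)

# Eq. (17) in the product basis (xx, xy, yx, yy); all amplitudes are real here.
PHI = [1 / SQRT2, 0.0, 0.0, 1 / SQRT2]


def polarization_ket(angle):
    """Real single-photon ket for linear polarization at `angle` from the x-axis."""
    return (math.cos(angle), math.sin(angle))


def product_ket(u, v):
    """Components of |u>_1 |v>_2 in the basis (xx, xy, yx, yy)."""
    return [ui * vj for ui in u for vj in v]


def phi_in_rotated_basis(a):
    """Right-hand side of Eq. (19), with theta_1 at angle a and theta_-1 at a + pi/2."""
    plus = polarization_ket(a)
    minus = polarization_ket(a + math.pi / 2)
    pp = product_ket(plus, plus)
    mm = product_ket(minus, minus)
    return [(x + y) / SQRT2 for x, y in zip(pp, mm)]


for a in (0.0, 0.4, math.pi / 8, 2.0):
    deviation = max(abs(x - y) for x, y in zip(phi_in_rotated_basis(a), PHI))
    print(f"a = {a:.3f}: max deviation from Eq. (17) = {deviation:.1e}")
```

The deviation is at the level of floating-point rounding for every angle, because cos²a + sin²a = 1 makes the cross terms cancel.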

Since |θ₋₁>₁ is orthogonal to |θ₁>₁, only the first term of Eq. (19) contributes to the inner product in Eq. (18a) if s = t = 1. Since the inner product of |θ₁>₁ with itself is unity because of normalization, Eq. (18a) reduces for s = t = 1 to

(18b) $\mathrm{prob}_\Phi(1, 1 \mid a, b) = \tfrac{1}{2}\left|{}_2\langle\theta_1|\varphi_1\rangle_2\right|^2$.

Finally, the expression on the right hand side of Eq. (18b) is evaluated by using the law of Malus, which is preserved in the quantum mechanical treatment of polarization states: that the probability for a photon polarized in a direction n to pass through an ideal polarization analyzer with axis of transmission n′ equals the squared cosine of the angle between n and n′. Hence

(20a) $\mathrm{prob}_\Phi(1, 1 \mid a, b) = \tfrac{1}{2}\cos^2\sigma$,

where σ is b − a. Likewise,

(20b) $\mathrm{prob}_\Phi(-1, -1 \mid a, b) = \tfrac{1}{2}\cos^2\sigma$, and

(20c) $\mathrm{prob}_\Phi(1, -1 \mid a, b) = \mathrm{prob}_\Phi(-1, 1 \mid a, b) = \tfrac{1}{2}\sin^2\sigma$.

The expectation value of the product of the results s and t of the polarization analyses of photons 1 and 2 by their respective analyzers is

(21) $E_\Phi(a, b) = \mathrm{prob}_\Phi(1,1 \mid a,b) + \mathrm{prob}_\Phi(-1,-1 \mid a,b) - \mathrm{prob}_\Phi(1,-1 \mid a,b) - \mathrm{prob}_\Phi(-1,1 \mid a,b) = \cos^2\sigma - \sin^2\sigma = \cos 2\sigma$.
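Eqs. (18a) through (21) can be reproduced numerically from the state vector alone, without invoking the law of Malus separately. The sketch below uses the same conventions as the derivation, with real polarization kets. Analyzer angles are measured from the x-axis, an assumption standing in for the text's arbitrary fixed direction. The sketch compares the computed probabilities and correlation with Eqs. (20a)-(20c) and (21). Function names are hypothetical.

```python
import math

SQRT2 = math.sqrt(2.0)
PHI = [1 / SQRT2, 0.0, 0.0, 1 / SQRT2]  # Eq. (17), basis order (xx, xy, yx, yy)


def analyzer_ket(angle, outcome):
    """Polarization ket for emergence in the ordinary ray (+1) or extraordinary ray (-1).

    The ordinary ray corresponds to polarization along the transmission axis at
    `angle`; the extraordinary ray to the perpendicular direction angle + pi/2.
    """
    theta = angle if outcome == 1 else angle + math.pi / 2
    return (math.cos(theta), math.sin(theta))


def prob(s, t, a, b):
    """Eq. (18a): squared inner product of |Phi> with |theta_s>_1 |phi_t>_2."""
    u, v = analyzer_ket(a, s), analyzer_ket(b, t)
    product = [ui * vj for ui in u for vj in v]
    amplitude = sum(c * p for c, p in zip(PHI, product))
    return amplitude ** 2


def expectation(a, b):
    """Eq. (21): E_Phi(a, b) = sum over s, t of s * t * prob(s, t | a, b)."""
    return sum(s * t * prob(s, t, a, b) for s in (1, -1) for t in (1, -1))


a, b = 0.2, 1.1
sigma = b - a
print(prob(1, 1, a, b), 0.5 * math.cos(sigma) ** 2)   # Eq. (20a)
print(prob(1, -1, a, b), 0.5 * math.sin(sigma) ** 2)  # Eq. (20c)
print(expectation(a, b), math.cos(2 * sigma))         # Eq. (21)
```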

Now choose as the orientation angles of the transmission axes

(22) $a = \pi/4,\quad a' = 0,\quad b = \pi/8,\quad b' = 3\pi/8$.

Then

(23a) $E_\Phi(a, b) = \cos 2(-\pi/8) = 0.707$,

(23b) $E_\Phi(a, b') = \cos 2(\pi/8) = 0.707$,

(23c) $E_\Phi(a', b) = \cos 2(\pi/8) = 0.707$,

(23d) $E_\Phi(a', b') = \cos 2(3\pi/8) = -0.707$.

Therefore

(24) $S_\Phi \equiv E_\Phi(a,b) + E_\Phi(a,b') + E_\Phi(a',b) - E_\Phi(a',b') = 2\sqrt{2} \approx 2.828$.
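The arithmetic of Eqs. (22)-(24) is easy to reproduce, and a coarse search shows how the chosen angles compare with other choices. The sketch below uses only the closed form E_Φ(a, b) = cos 2(b − a) of Eq. (21). The grid of multiples of π/16 is an arbitrary illustrative choice that happens to contain the angles of Eq. (22). The function names are hypothetical.

```python
import itertools
import math


def E_phi(a, b):
    """Eq. (21): correlation predicted for |Phi> with analyzer angles a and b."""
    return math.cos(2 * (b - a))


def S_phi(a, a_p, b, b_p):
    """BCHSH combination of Ineq. (16) evaluated on the quantum correlations."""
    return E_phi(a, b) + E_phi(a, b_p) + E_phi(a_p, b) - E_phi(a_p, b_p)


# Angles of Eq. (22).
s_22 = S_phi(math.pi / 4, 0.0, math.pi / 8, 3 * math.pi / 8)
print(s_22, 2 * math.sqrt(2))

# Coarse grid over multiples of pi/16 for all four angles.
grid = [k * math.pi / 16 for k in range(16)]
best = max(S_phi(*angles) for angles in itertools.product(grid, repeat=4))
print(best)
```

On this grid nothing exceeds the value at the angles of Eq. (22), and this is no accident of the grid. For fixed a and a′, maximizing over b and b′ gives at most 2|cos(a − a′)| + 2|sin(a − a′)|, which never exceeds 2√2.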

Eq. (24) shows that there are situations where the Quantum Mechanical calculations violate the BCHSH Inequality, thereby completing the proof of a version of Bell's Theorem. It is important to note, furthermore, that every entangled pure quantum state yields predictions in violation of Ineq. (16) for a suitable choice of quantities. Gisin (1991) and Popescu and Rohrlich (1992) have independently demonstrated this. Popescu and Rohrlich (1992) also show that the maximum amount of violation is achieved with a quantum state of maximum degree of entanglement, exemplified by |Φ> of Eq. (17).

In Section 3 experimental tests of Bell's Inequality and their implications will be discussed. At this point, however, it is important to discuss the significance of Bell's Theorem from a purely theoretical standpoint. What Bell's Theorem shows is that Quantum Mechanics has a structure that is incompatible with the conceptual framework within which Bell's Inequality was demonstrated. In that framework, a composite system with two subsystems 1 and 2 is described by a complete state assigning a probability to each of the possible results of every joint experiment on 1 and 2. The probability functions satisfy the two Independence Conditions (8a,b) and (9a,b), and mixtures governed by arbitrary probability functions on the space of complete states are allowed. An ‘experiment’ on a system can be understood to include the context within which a physical property of the system is measured, but the two Independence Conditions require the context to be local. That is, if a property of 1 is measured, only properties of 1 co-measurable with it can be part of its context, and similarly for a property of 2. Therefore the incompatibility of Quantum Mechanics with this conceptual framework does not preclude the contextual hidden variables models proposed by Bell (1966), an example of which is the de Broglie-Bohm model. It does, however, preclude models in which the contexts are required to be local. The most striking implication of Bell's Theorem is the light that it throws upon the EPR argument. That argument examines an entangled quantum state whose two subsystems have correlated properties (e.g., position in both or linear momentum in both). It shows that a necessary condition for avoiding action-at-a-distance between the measurement outcomes of these properties is to ascribe to each subsystem, without reference to the other, “elements of physical reality” corresponding to the correlated properties.
Bell's Theorem shows that such an ascription will have statistical implications in disagreement with those of quantum mechanics. A penetrating feature of Bell's analysis, when compared with that of EPR, is his examination of different properties in the two subsystems, such as linear polarizations along different directions in 1 and 2, rather than restricting his attention to correlations of identical properties in the two subsystems.

When Bell published his pioneering paper in 1964 he did not urge an experimental resolution of the conflict between Quantum Mechanics and Local Realistic Theories, probably because the former had been confirmed often and precisely in many branches of physics.

It was doubtful, however, that any of these many confirmations occurred in situations of conflict between Quantum Mechanics and Local Realistic Theories, and therefore a reliable experimental adjudication was desirable. A proposal to this effect was made by Clauser, Horne, Shimony, and Holt (1969), henceforth CHSH, who suggested that the pairs 1 and 2 be the photons produced in an atomic cascade from an initial atomic state with total angular momentum J = 0, through an intermediate atomic state with J = 1, to a final atomic state with J = 0, as in an experiment performed with calcium vapor for other purposes by Kocher and Commins (1967). This arrangement has several advantages. First, by conservation of angular momentum the photon pair emitted in the cascade has total angular momentum 0, and if the photons are collected in cones of small aperture along the z and −z directions the total orbital angular momentum is small; consequently the total spin (or polarization) angular momentum is close to 0, and the polarizations of the two photons are tightly correlated. Second, the photons are in the visible frequency range and hence susceptible to quite accurate polarization analysis with standard polarization analyzers. Third, the stochastic interval between the emission of the first photon and the emission of the second is in the range of 10 ns, which is small compared to the average time between the production of successive pairs, so the associated photons 1 and 2 of a pair are almost unequivocally matched.
A disadvantage of this arrangement, however, is that photo-detectors in the relevant frequency range are not very efficient (less than 20% efficient for single photons, and hence less than 4% efficient for detection of a pair), and therefore an auxiliary assumption is needed in order to make inferences from the statistics of the subensemble of pairs that is counted to the entire ensemble of pairs emitted during the period of observation. (This disadvantage causes a “detection loophole” which prevents the experiment, and others like it, from being decisive; procedures for blocking this loophole are at present being investigated actively and will be discussed in Section 4.)

In the experiment proposed by CHSH the measurements are polarization analyses, with the transmission axis of analyzer I oriented at angles a and a′, and the transmission axis of analyzer II oriented at angles b and b′. The results s = 1 and s = −1 respectively designate passage and non-passage of photon 1 through analyzer I, and t = 1 and t = −1 respectively designate passage and non-passage of photon 2 through analyzer II. Non-passage through the analyzer is thus substituted for passage into the extraordinary ray. This simplification of the apparatus causes an obvious problem: it is impossible to discriminate directly between a photon that fails to pass through the analyzer and one which does pass through the analyzer but is not detected because of the inefficiency of the photo-detectors. CHSH dealt with this problem in two steps. First, they expressed the probabilities $p_\rho(s,t\mid a,b)$ in which s or t (or both) is −1 as follows:

(25a) $p_\rho(1,-1\mid a,b) = p_\rho(1,1\mid a,\infty) - p_\rho(1,1\mid a,b)$,

where $\infty$ replacing b designates the removal of analyzer II from the path of photon 2. Likewise

(25b) $p_\rho(-1,1\mid a,b) = p_\rho(1,1\mid \infty,b) - p_\rho(1,1\mid a,b)$,

where analyzer II is oriented at angle b and $\infty$ replacing a designates removal of analyzer I from the path of photon 1; and finally

(25c) $p_\rho(-1,-1\mid a,b) = 1 - p_\rho(1,1\mid a,b) - p_\rho(1,-1\mid a,b) - p_\rho(-1,1\mid a,b) = 1 - p_\rho(1,1\mid a,\infty) - p_\rho(1,1\mid \infty,b) + p_\rho(1,1\mid a,b)$.
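As a minimal sketch of Eqs. (25a,b,c), the Python function below reconstructs all four probabilities $p_\rho(s,t\mid a,b)$ from the three "both pass" probabilities, assuming, as the text does, that a photon whose analyzer has been removed always counts as passing. It also computes the correlation, taken here as the expectation of the product st. The function names and the sample numbers are hypothetical illustrations, not experimental values.

```python
def joint_outcome_probs(p11_ab, p11_a_inf, p11_inf_b):
    """Return {(s, t): p(s, t | a, b)} for s, t in {+1, -1}.

    p11_ab    -- p(1, 1 | a, b): both photons pass analyzers set at a and b
    p11_a_inf -- p(1, 1 | a, inf): analyzer II removed
    p11_inf_b -- p(1, 1 | inf, b): analyzer I removed
    """
    return {
        (1, 1): p11_ab,
        (1, -1): p11_a_inf - p11_ab,                    # Eq. (25a)
        (-1, 1): p11_inf_b - p11_ab,                    # Eq. (25b)
        (-1, -1): 1 - p11_a_inf - p11_inf_b + p11_ab,   # Eq. (25c)
    }


def correlation(probs):
    """Expectation of the product s*t over the four joint outcomes."""
    return sum(s * t * p for (s, t), p in probs.items())


# Hypothetical sample values, chosen only for illustration.
probs = joint_outcome_probs(0.4, 0.5, 0.5)
print(probs)               # the four probabilities sum to 1
print(correlation(probs))  # 1 + 4*0.4 - 2*0.5 - 2*0.5 = 0.6, up to rounding
```

Expanding the correlation with Eqs. (25a,b,c) gives $1 + 4p_\rho(1,1\mid a,b) - 2p_\rho(1,1\mid a,\infty) - 2p_\rho(1,1\mid \infty,b)$; summing four such terms with the BCHSH signs is what turns Ineq. (16) into the inequality (27) on detection rates.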

Their second step is to make the “fair sampling assumption”: given that a pair of photons enters the pair of rays associated with passage through the polarization analyzers, the probability of their joint detection is independent of the orientations of the analyzers (including the quasi-orientation $\infty$, which designates removal). With this assumption, together with the assumption that the local realistic expressions $p_\rho(s,t\mid a,b)$ correctly evaluate the probabilities of the results of polarization analyses of the photon pair (1,2), we can express these probabilities in terms of detection rates:

(26a) $p_\rho(1,1\mid a,b) = D(a,b)/D_0$,

where $D(a,b)$ is the counting rate of pairs when the transmission axes of analyzers I and II are oriented respectively at angles a and b, and $D_0$ is the detection rate when both analyzers are removed from the paths of photons 1 and 2;

(26b) $p_\rho(1,1\mid a,\infty) = D_1(a,\infty)/D_0$,

where $D_1(a,\infty)$ is the counting rate of pairs when analyzer I is oriented at angle a while analyzer II is removed; and

(26c) $p_\rho(1,1\mid \infty,b) = D_2(\infty,b)/D_0$,

where $D_2(\infty,b)$ is the counting rate when analyzer II is oriented at angle b while analyzer I is removed. When Ineq. (16) is combined with Eq. (15b) and with Eqs. (26a,b,c) relating probabilities to detection rates, the result is an inequality governing detection rates,

(27) $-1 \le D(a,b)/D_0 + D(a,b')/D_0 + D(a',b)/D_0 - D(a',b')/D_0 - [D_1(a)/D_0 + D_2(b)/D_0] \le 0$.

(In (27), $D_1(a)$ is an abbreviation for $D_1(a,\infty)$, and $D_2(b)$ is an abbreviation for $D_2(\infty,b)$.)
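Why should local realistic theories obey (27)? A minimal sketch, restricted to deterministic complete states that fix in advance whether each photon passes each analyzer orientation (1 = pass, 0 = block), with a removed analyzer always letting the photon through, enumerates all 16 such instruction sets. Each one gives the middle quantity of (27) the value −1 or 0, so any mixture over complete states, which averages these values, stays between −1 and 0. The function name is hypothetical, detector inefficiency is ignored here (the text handles it through the fair sampling assumption), and stochastic complete states are covered by the general lemma rather than by this enumeration.

```python
from itertools import product


def rate_combination(x_a, x_a2, y_b, y_b2):
    """Middle quantity of Ineq. (27) for one deterministic instruction set.

    x_a, x_a2 -- 1 if photon 1 would pass analyzer I at a / a', else 0
    y_b, y_b2 -- 1 if photon 2 would pass analyzer II at b / b', else 0
    A removed analyzer is assumed always to let the photon through, so the
    'one analyzer removed' terms reduce to x_a and y_b.
    """
    coincidences = x_a * y_b + x_a * y_b2 + x_a2 * y_b - x_a2 * y_b2
    return coincidences - x_a - y_b


values = {rate_combination(*bits) for bits in product((0, 1), repeat=4)}
print(sorted(values))  # -> [-1, 0]
```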

If the following symmetry conditions are satisfied by the experiment:

(28) $D(a,b) = D(|b-a|)$,

(29) $D_1(a) = D_1$, independent of a;

(30) $D_2(b) = D_2$, independent of b,

one can rewrite Ineq. (27) as

(31) $-1 \le D(|b-a|)/D_0 + D(|b'-a|)/D_0 + D(|b-a'|)/D_0 - D(|b'-a'|)/D_0 - [D_1/D_0 + D_2/D_0] \le 0$.

The first experimental test of Bell's Inequality, performed by Freedman and Clauser (1972), proceeded by making two applications of Ineq. (31), one to the angles $a = \pi/4$, $a' = 0$, $b = \pi/8$, $b' = 3\pi/8$, yielding

(32a) $-1 \le [3D(\pi/8)/D_0 - D(3\pi/8)/D_0] - D_1/D_0 - D_2/D_0 \le 0$,

and another to the angles $a = 3\pi/4$, $a' = 0$, $b = 3\pi/8$, $b' = 9\pi/8$, yielding (since the action of a polarizer is unchanged when its axis is rotated by $\pi$, so that $D(9\pi/8) = D(\pi/8)$)

(32b) $-1 \le [3D(3\pi/8)/D_0 - D(\pi/8)/D_0] - D_1/D_0 - D_2/D_0 \le 0$.

Combining Ineq. (32a) and Ineq. (32b) yields

(33) $-\tfrac{1}{4} \le D(\pi/8)/D_0 - D(3\pi/8)/D_0 \le \tfrac{1}{4}$.
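The step from (32a) and (32b) to (33) is a subtraction. Writing, for brevity only, $u = D(\pi/8)/D_0$, $v = D(3\pi/8)/D_0$ and $k = (D_1 + D_2)/D_0$ (labels introduced here, not in the original):

```latex
\begin{aligned}
&\text{(32a):}\quad -1 \le 3u - v - k \le 0, \qquad
 \text{(32b):}\quad -1 \le 3v - u - k \le 0, \\
&(3u - v - k) - (3v - u - k) = 4(u - v), \\
&-1 - 0 \;\le\; 4(u - v) \;\le\; 0 - (-1)
 \quad\Longrightarrow\quad -\tfrac{1}{4} \le u - v \le \tfrac{1}{4}.
\end{aligned}
```

The single-count term k cancels, which is why (33) involves only the two coincidence rates.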

The Quantum Mechanical prediction for this arrangement, taking into account the uncertainties about the polarization analyzers and the angle from the source subtended by the analyzers, is

(34) $[D(\pi/8)/D_0 - D(3\pi/8)/D_0]_{\mathrm{QM}} = (0.401 \pm 0.005) - (0.100 \pm 0.005) = 0.301 \pm 0.007$,

The experimental result obtained by Freedman and Clauser was

(35) $[D(\pi/8)/D_0 - D(3\pi/8)/D_0]_{\mathrm{expt}} = 0.300 \pm 0.008$,

which is 6.5 sd from the limit allowed by Ineq. (33) but in good agreement with the quantum mechanical prediction Eq. (34). This was a difficult experiment, requiring 200 hours of running time, much longer than in most later tests of Bell's Inequality, which were able to use lasers for exciting the sources of photon pairs.
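The comparison in (33) to (35) is simple arithmetic. The sketch below assumes that the two ±0.005 uncertainties in (34) are independent and therefore combine in quadrature (an assumption that reproduces the quoted ±0.007), recomputes the quantum mechanical value, and checks both it and the measured value against the bound of (33). The helper names are hypothetical.

```python
import math

BOUND = 0.25  # Ineq. (33): |D(pi/8)/D0 - D(3pi/8)/D0| <= 1/4


def difference_with_error(x, sx, y, sy):
    """Return x - y and its uncertainty, assuming independent errors (quadrature)."""
    return x - y, math.hypot(sx, sy)


def violates_33(value):
    """True if a value of D(pi/8)/D0 - D(3pi/8)/D0 lies outside [-1/4, 1/4]."""
    return not -BOUND <= value <= BOUND


qm_value, qm_err = difference_with_error(0.401, 0.005, 0.100, 0.005)  # Eq. (34)
print(f"QM prediction: {qm_value:.3f} +/- {qm_err:.3f}")  # QM prediction: 0.301 +/- 0.007
print(violates_33(qm_value), violates_33(0.300))           # True True (0.300 is Eq. (35))
```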

Several dozen experiments have been performed to test Bell's Inequalities. References will now be given to some of the most noteworthy of these, along with references to survey articles which provide information about others. A discussion of those actual or proposed experiments which were designed to close two serious loopholes in the early Bell experiments, the “detection loophole” and the “communication loophole”, will be reserved for Sections 4 and 5 respectively.

Holt and Pipkin completed in 1973 (Holt 1973) an experiment very much like that of Freedman and Clauser, but examining photon pairs produced in the $9^1P_1 \to 7^3S_1 \to 6^3P_0$ cascade in mercury-198, an isotope with zero nuclear spin, after using electron bombardment to pump the atoms to the first state in this cascade. The result was contrary to that of Freedman and Clauser: fairly good agreement with Ineq. (33), which is a consequence of the BCHSH Inequality, and a disagreement with the quantum mechanical prediction by nearly 4 sd. Because of the discrepancy between these two early experiments, Clauser (1976) repeated the Holt-Pipkin experiment using the same cascade and excitation method but a different spin-0 isotope of mercury; his results agreed well with the quantum mechanical predictions but violated the consequence of Bell's Inequality. Clauser also suggested a possible explanation for the anomalous result of Holt and Pipkin: that the glass of the Pyrex bulb containing the mercury vapor was under stress and hence was optically active, thereby giving rise to erroneous determinations of the polarizations of the cascade photons.

Fry and Thompson (1976) also performed a variant of the Holt-Pipkin experiment, using a different isotope of mercury and a different cascade, and exciting the atoms by radiation from a narrow-bandwidth tunable dye laser. Their results also agreed well with the quantum mechanical predictions and disagreed sharply with Bell's Inequality. They gathered data in only 80 minutes, as a result of the high excitation rate achieved by the laser.

Four experiments in the 1970s, by Kasday-Ullman-Wu, Faraci-Gutkowski-Notarigo-Pennisi, Wilson-Lowe-Butt, and Bruno-d’Agostino-Maroni, used photon pairs produced in positronium annihilation instead of cascade photons. Of these, all but that of Faraci et al. gave results in good agreement with the quantum mechanical predictions and in disagreement with Bell's Inequalities. A discussion of these experiments is given in the review article by Clauser and Shimony (1978), who regard them as less convincing than those using cascade photons, because they rely upon stronger auxiliary assumptions.

The first experiment using polarization analyzers with two exit channels, thus realizing the theoretical scheme in the third step of the argument for Bell's Theorem in Section 2, was performed in the early 1980s with cascade photons from laser-excited calcium atoms by Aspect, Grangier, and Roger (1982). The outcome confirmed the predictions of quantum mechanics over those of local realistic theories more dramatically than any of its predecessors, with the experimental result deviating from the upper limit in a Bell's Inequality by 40 sd. An experiment soon afterwards by Aspect, Dalibard, and Roger (1982), which aimed at closing the communication loophole, will be discussed in Section 5. The historical article by Aspect (1992) reviews these experiments and also surveys experiments performed by Shih and Alley, by Ou and Mandel, by Rarity and Tapster, and by others, using photon pairs with correlated linear momenta produced by down-conversion in non-linear crystals. Some even more recent Bell tests are reported in an article on experiments and the foundations of quantum physics by Zeilinger (1999).

Pairs of photons have been the most common physical systems in Bell tests because they are relatively easy to produce and analyze, but there have been experiments using other systems. Lamehi-Rachti and Mittig (1976) measured spin correlations in proton pairs prepared by low-energy scattering. Their results agreed well with the quantum mechanical prediction and violated Bell's Inequality, but strong auxiliary assumptions had to be made, like those in the positronium annihilation experiments. In 2003 a Bell test was performed at KEK by A. Go (Go 2003) with B-mesons, and again the results favored the quantum mechanical predictions.

The outcomes of the Bell tests provide dramatic confirmations of the prima facie entanglement of many quantum states of systems consisting of two or more constituents, and hence of the existence of holism in physics at a fundamental level. Actually, the first confirmation of entanglement and holism antedated Bell's work, since Bohm and Aharonov (1957) demonstrated that the results of Wu and Shaknov (1950) on Compton scattering of the photon pairs produced in positronium annihilation already showed the entanglement of those photon pairs.

The summary in Section 3 of the pioneering experiment by Freedman and Clauser noted that their symmetry assumptions, Eqs. (28), (29), and (30), are susceptible to experimental check, and furthermore could have been dispensed with by measuring the detection rates with additional orientations of the analyzers. The fair sampling assumption, on the other hand, is essential in all the optical Bell tests performed so far for linking the results of polarization or direction analysis, which are not directly observable, with detection rates, which are observable. The absence of an experimental confirmation of the fair sampling assumption, together with the difficulty of testing Bell's Inequality without this assumption or another one equally remote from confirmation, is known as the “detection loophole” in the refutation of Local Realistic Theories, and it is the source of skepticism about the definitiveness of the experiments.

The seriousness of the detection loophole was increased by a model of Clauser and Horne (CH) (1974) in which the rates at which the photon pairs pass through the polarization analyzers with various orientations are consistent with an Inequality of Bell's type, but the hidden variables provide instructions to the photons and the apparatus not only regarding passage through the analyzers but also regarding detection, thereby violating the fair sampling assumption. Detection or non-detection is selective in the model in such a way that the detection rates violate the Bell-type Inequality and agree with the quantum mechanical predictions. Other models were constructed later by Fine (1982a; the “Prism Model”, later corrected by Maudlin 1994) and by C. H. Thompson (1996; the “Chaotic Ball model”). Although all these models are ad hoc and lack physical plausibility, they constitute existence proofs that Local Realistic Theories can be consistent with the quantum mechanical predictions provided that the detectors are properly selective. The detection loophole could in principle be blocked by a test of the BCHSH Inequality, provided that the detectors for particles 1 and 2 were sufficiently efficient and that there were a reliable way of determining how many pairs impinge upon the analyzer-detector assemblies. The first of these conditions can very likely be fulfilled if atoms from the dissociation of dimers are used as the particle pairs, as in the proposed experiment of Fry and Walther (Fry & Walther 1997, 2002; Walther & Fry 1997), discussed below, but the second condition does not at present seem feasible. Consequently a different strategy is needed.

Tools which are promising for blocking the detection loophole are two Inequalities derived by CH (Clauser & Horne 1974), henceforth called BCH Inequalities. Both differ from the BCHSH Inequality of Section 2 by involving ratios of probabilities. The two BCH Inequalities differ from each other in two respects: the first involves not only the probabilities of coincident counting of the 1 and 2 systems but also probabilities of single counting of 1 and 2 without reference to the other, and it makes no auxiliary assumption regarding the dependence of detection upon the placement of the analyzer; the second involves only probabilities of coincident detection and uses a weak, but still non-trivial, auxiliary assumption called “no enhancement.”

The experiment proposed by Fry and Walther intends to make use of the first BCH Inequality, dispensing with auxiliary assumptions, but the second BCH Inequality will also be reviewed here, because it would become indispensable if the optimism of Fry and Walther regarding the achievability of certain desirable experimental conditions turns out to be unwarranted.

The conceptual framework of both BCH Inequalities takes an analyzer/detector assembly as a unit, instead of considering the separate operation of its two parts, and it places only one analyzer/detector assembly in the 1 arm and one in the 2 arm of the experiment; in other words, for both analyzers I and II the two exit channels are detection and non-detection. Let N be the number of pairs of systems impinging on the two analyzer/detector assemblies, $N_1(a)$ the number detected by 1's analyzer/detector assembly, $N_2(b)$ the number detected by 2's analyzer/detector assembly, and $N_{12}(a,b)$ the number of pairs detected by both, where a and b are the respective settings of the analyzers. Let $N_1(m,a)$, $N_2(m,b)$, and $N_{12}(m,a,b)$ be the corresponding quantities predicted by the Local Realistic Theory with complete state m. Then the respective probabilities of detection by the analyzer/detector assemblies for 1 and 2 separately and by both together, predicted by the Local Realistic Theory with complete state m, are

(36a) $p_1(m,a) = N_1(m,a)/N$,

(36b) $p_2(m,b) = N_2(m,b)/N$,

(36c) $p(m,a,b) = N_{12}(m,a,b)/N$.

Note that the total number N of pairs appears in the denominators on the right-hand side, but the difficulty of determining this quantity experimentally is circumvented by CH, who derive an inequality concerning ratios of the probabilities in Eqs. (36a,b,c), so that the denominator N cancels. The analogues of the Independence Conditions (8a,b) and (9a,b) are taken as part of the conceptual framework of a Local Realistic Theory, which implies the factorization of $p(m,a,b)$:

(37) $p(m,a,b) = p_1(m,a)\,p_2(m,b)$.

CH then prove a lemma similar to the lemma in Section 2: if q and q′ are real numbers in the closed interval [0,X], and r and r′ are real numbers in the closed interval [0,Y], then

(38) $-XY \le qr + qr' + q'r - q'r' - qY - rX \le 0$.

(When X, Y ≤ 1, as in all the applications below, the lower bound −XY is at least −1.)
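Because the expression in (38) is linear in each of q, q′, r, r′ separately, its extreme values over the box of allowed values are attained at corners, so a grid that includes the corners finds the true minimum and maximum. The following sketch (function names hypothetical) performs that scan for sample bounds X and Y; it reports a minimum of −XY and a maximum of 0.

```python
import itertools


def lemma_expression(q, q2, r, r2, X, Y):
    """qr + qr' + q'r - q'r' - qY - rX, the middle term of Ineq. (38)."""
    return q * r + q * r2 + q2 * r - q2 * r2 - q * Y - r * X


def scan_lemma(X, Y, steps=6):
    """Min and max over a grid (corners included) of q, q' in [0, X] and r, r' in [0, Y]."""
    qs = [X * i / steps for i in range(steps + 1)]
    rs = [Y * j / steps for j in range(steps + 1)]
    values = [
        lemma_expression(q, q2, r, r2, X, Y)
        for q, q2 in itertools.product(qs, repeat=2)
        for r, r2 in itertools.product(rs, repeat=2)
    ]
    return min(values), max(values)


low, high = scan_lemma(0.7, 0.9)
print(f"min = {low:.4f} (-XY = {-0.7 * 0.9:.4f}), max = {high:.4f}")
# min = -0.6300 (-XY = -0.6300), max = 0.0000
```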

Then making the substitution

(39) $q = p_1(m,a)$, $q' = p_1(m,a')$, $r = p_2(m,b)$, $r' = p_2(m,b')$,

and using Eq. (37) we have

(40) $-1 \le p(m,a,b) + p(m,a,b') + p(m,a',b) - p(m,a',b') - p_1(m,a)\,Y - p_2(m,b)\,X \le 0$.

When the Local Realistic Theory describes an ensemble of pairs by a probability distribution ρ over the space M of complete states, the probabilities corresponding to those of (36a,b,c) are

(41a) $p^1_\rho(a) = \int_M p_1(m,a)\,d\rho$,

(41b) $p^2_\rho(b) = \int_M p_2(m,b)\,d\rho$,

(41c) $p_\rho(a,b) = \int_M p(m,a,b)\,d\rho$,

and when X and Y are taken to be 1, as they certainly can be from general properties of probability distributions, integrating Inequality (40) gives

(42) $-1 \le p_\rho(a,b) + p_\rho(a,b') + p_\rho(a',b) - p_\rho(a',b') - p^1_\rho(a) - p^2_\rho(b) \le 0$.

Since CH are seeking an Inequality involving only ratios of probabilities, they disregard the lower limit and rewrite the right-hand Inequality as

(43) $\dfrac{p_\rho(a,b) + p_\rho(a,b') + p_\rho(a',b) - p_\rho(a',b')}{p^1_\rho(a) + p^2_\rho(b)} \le 1$.

This is the first BCH Inequality. In principle this Inequality could be used to adjudicate between the family of Local Realistic Theories and Quantum Mechanics, provided that the detectors are sufficiently efficient and also provided that the single detection counts are not spoiled by counting systems that do not belong to the pairs in the ensemble of interest. In experiments using photon pairs from a cascade, as most of the early Bell tests did, it can happen that the second transition occurs without the first step in the cascade, thus producing a single photon without a partner. To cope with this difficulty CH make the “no enhancement assumption”, which is considerably weaker than the “fair sampling” assumption used in Section 3: if an analyzer is removed from the path of either 1 or 2 (an operation designated symbolically by letting $\infty$ replace the parameter a or b of the respective analyzer), the resulting probability of detection is at least as great as when a finite parameter is used, i.e.,

(44a) $p_1(m,a) \le p_1(m,\infty)$,

(44b) $p_2(m,b) \le p_2(m,\infty)$.

Now let the right-hand side of (44a) be X and the right-hand side of (44b) be Y, and insert these values into Inequality (40). Using Eq. (37) twice, so that $p_1(m,a)\,p_2(m,\infty) = p(m,a,\infty)$ and $p_1(m,\infty)\,p_2(m,b) = p(m,\infty,b)$, we obtain

(45) $-1 \le p(m,a,b) + p(m,a,b') + p(m,a',b) - p(m,a',b') - p(m,a,\infty) - p(m,\infty,b) \le 0$.

Integrate Inequality (45) using the distribution ρ and then retain only the right-hand Inequality, in order to obtain an expression involving ratios only of probabilities of joint detections:

(46) $\dfrac{p_\rho(a,b) + p_\rho(a,b') + p_\rho(a',b) - p_\rho(a',b')}{p_\rho(a,\infty) + p_\rho(\infty,b)} \le 1$.

This is the second BCH Inequality.
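To see why the second BCH Inequality matters when detectors are inefficient, consider an idealized quantum mechanical model, assumed here purely for illustration and not taken from any experiment in the text: photon pairs in the maximally entangled polarization state $(|H\rangle|H\rangle + |V\rangle|V\rangle)/\sqrt{2}$, perfect polarizers, no unpaired photons, and a hypothetical efficiency eta for each analyzer/detector assembly. Both photons then pass polarizers whose axes differ by θ with probability ½cos²θ, and each passes singly with probability ½. With the Freedman-Clauser angles of (32a), the left side of the first BCH Inequality (43) scales with eta, while the left side of the second (46) does not depend on eta at all.

```python
import math


def both_pass(theta):
    """P(both photons pass) for axes differing by theta; assumed state (|HH>+|VV>)/sqrt(2)."""
    return 0.5 * math.cos(theta) ** 2


def bch_ratios(eta, a=math.pi / 4, a2=0.0, b=math.pi / 8, b2=3 * math.pi / 8):
    """Left-hand sides of Ineq. (43) and Ineq. (46) in the idealized model.

    eta -- hypothetical efficiency of each analyzer/detector assembly.
    """
    def joint(x, y):
        return eta ** 2 * both_pass(x - y)        # coincident detection

    numerator = joint(a, b) + joint(a, b2) + joint(a2, b) - joint(a2, b2)
    singles = eta * 0.5 + eta * 0.5               # p1(a) + p2(b)
    one_removed = eta ** 2 * 0.5 + eta ** 2 * 0.5  # p(a, inf) + p(inf, b)
    return numerator / singles, numerator / one_removed


for eta in (1.0, 0.9, 0.8, 0.2):
    first, second = bch_ratios(eta)
    print(f"eta={eta:.1f}: first BCH ratio {first:.3f}, second BCH ratio {second:.3f}")
```

In this model the first ratio exceeds 1 only when eta is greater than 2/(1 + √2) ≈ 0.83, whereas the second stays at (1 + √2)/2 ≈ 1.207 for every eta; this is why the “no enhancement” assumption lets a test proceed with inefficient detectors.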

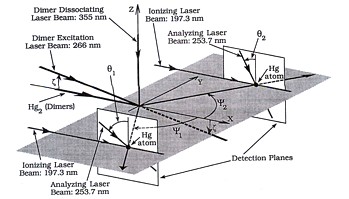

In the experiment initiated by Fry and Walther (1997), but not yet complete, dimers of ¹⁹⁹Hg are generated in a supersonic jet expansion and then photo-dissociated by two photons from appropriate laser beams. Each ¹⁹⁹Hg atom has nuclear spin ½, and the total spin F (electronic plus nuclear) of the dimer is 0 because of the symmetry rules for the total wave function of a homonuclear diatomic molecule consisting of two fermions. (The argument for this conclusion is fairly intricate but well presented in Walther and Fry 1997.) Because the dissociation is a two-body process, momentum conservation guarantees that the directions of the two atoms after dissociation are strictly correlated, so that when the two analyzer/detector assemblies are optimally placed, the entrance of one ¹⁹⁹Hg atom into its analyzer/detector will almost certainly be accompanied by the entrance of the other atom into its assembly; the primary reason for occasional failures of this coordination is the non-zero volume of the region in which the dissociation occurs. Since each ¹⁹⁹Hg atom (with 80 electrons) is in the electronic ground state, it has zero electronic spin, and therefore the total spin F of each atom equals its nuclear spin: F = ½. Given any choice of an axis, the only possible values of the magnetic quantum number $F_M$ relative to this axis are $F_M = +\tfrac12$ and $F_M = -\tfrac12$. The directions of this axis, $\theta_1$ for atom 1 and $\theta_2$ for atom 2, are the quantities to be used for the parameters a and b in the BCH Inequalities. The angles $\theta_1$ and $\theta_2$ are physically fixed by the directions of two left-circularly polarized 253.7 nm laser beams propagating in parallel planes, each perpendicular to the plane in which atoms 1 and 2 travel from the dissociation region. See Figure 2:

Figure 2

(reprinted with permission)

This is a schematic of the experiment showing the direction of the mercury dimer beam, together with a pair of the dissociated atoms and their respective detection planes. The relative directions of the various laser beams are also shown.

The left-circular polarization ensures that if a photon is absorbed by atom 1 then $F_M$ of atom 1 will decrease by one, which is possible only if $F_M$ is initially $+\tfrac12$; likewise for absorption by atom 2. Thus each laser beam is selective and acts as an analyzer by taking only an atom with $F_M = +\tfrac12$, 1 or 2 as the case may be, into a specific excited state. Detection is achieved in several steps. The first step is the impingement on atom 1 of a 197.3 nm laser beam, timed to arrive within the lifetime of the excited state produced by the 253.7 nm laser beam; absorption of a photon from this beam by 1 will cause ionization (and likewise an excited atom 2 is ionized). The second step is the detection of the resulting ion, the associated photoelectron, or both, a highly efficient process since both of these products of ionization are charged. According to the first BCH Inequality, the coincident detection rates and the single detection rates in this experiment satisfy

(47) $\dfrac{D(\theta_1,\theta_2) + D(\theta_1,\theta'_2) + D(\theta'_1,\theta_2) - D(\theta'_1,\theta'_2)}{D_1(\theta_1) + D_2(\theta_2)} \le 1$.

Fry and Walther calculate that with proper choices of the four angles, large enough detector efficiencies, a large enough probability of 1 entering the region of analysis, a large enough probability of 2 entering its region of analysis conditional upon 1 doing so, and a small enough probability of mistaken analysis (e.g., mistaking an $F_M = -\tfrac12$ for an $F_M = +\tfrac12$ because of rare processes), the quantum mechanical predictions will violate (47). If these predictions are fulfilled, no auxiliary assumption like “no enhancement” will be needed to disprove Inequality (43) experimentally, and the detection loophole in the refutation of the family of Local Realistic Theories will thereby be closed. Fry and Walther warn, however, against excessive optimism about detecting a sufficiently large percentage of the ions and electrons produced by the ionization of the Hg atoms while keeping a low rate of errors due to background or noise counts: “the Hg partial pressure, as well as the partial pressure of all other residual gases, must be kept as low as possible. An ultra high vacuum of better than 10⁻⁹ Torr is required and the detector must be cooled to liquid nitrogen temperatures to freeze out background Hg atoms… Equally important is the suppression of photoelectrons produced by scattered photons.” (Fry & Walther 1997, p. 67). If these desiderata are not achieved, it will be necessary to resort to the second BCH Inequality, which requires the “no enhancement” assumption.
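The spin version can be sketched the same way. Assuming the nuclear spins of the two ¹⁹⁹Hg atoms are in the total-spin-0 (singlet) state described above, quantum mechanics gives probability ½sin²((θ₁ − θ₂)/2) that both atoms are found with $F_M = +\tfrac12$ along their respective axes, and ½ for each atom singly. The angles below are a hypothetical choice, not Fry and Walther's settings, and a single hypothetical lumped efficiency stands in for all the detection and entry factors they list. Note the half-angle: spin axes must differ by twice the angles used for photon polarizers to produce the same correlations.

```python
import math


def both_plus(theta1, theta2):
    """P(F_M = +1/2 along theta1 for atom 1 and along theta2 for atom 2), spin singlet."""
    return 0.5 * math.sin((theta1 - theta2) / 2) ** 2


def ratio_47(t1, t1p, t2, t2p, efficiency=1.0):
    """Left-hand side of Ineq. (47); 'efficiency' is a hypothetical lumped factor."""
    def coincidences(x, y):
        return efficiency ** 2 * both_plus(x, y)

    numerator = (coincidences(t1, t2) + coincidences(t1, t2p)
                 + coincidences(t1p, t2) - coincidences(t1p, t2p))
    singles = efficiency * 0.5 + efficiency * 0.5  # normalized D1(theta1) + D2(theta2)
    return numerator / singles


# Hypothetical settings: three pairs differ by 3*pi/4, the fourth by 9*pi/4 (equivalent to pi/4).
angles = dict(t1=0.0, t1p=3 * math.pi / 2, t2=3 * math.pi / 4, t2p=-3 * math.pi / 4)
print(ratio_47(**angles))                  # about 1.207, violating (47)
print(ratio_47(**angles, efficiency=0.8))  # about 0.966, no violation
```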

The derivations of all the variants of Bell's Inequality depend upon the two Independence Conditions (8a,b) and (9a,b). Experimental data that disagree with a Bell's Inequality are not a refutation of Local Realistic Theories unless these Conditions are satisfied by the experimental arrangement. In the early tests of Bell's Inequalities it was plausible that these Conditions were satisfied simply because the 1 and 2 arms of the experiment were spatially well separated in the laboratory frame of reference. This satisfaction, however, is a mere contingency not guaranteed by any law of physics, and hence it is physically possible that the setting of the analyzer of 1 and its detection or non-detection could influence the outcome of analysis and the detection or non-detection of 2, and conversely. This is the “communication loophole” in the early Bell tests. If the process of analysis and detection of 1 were an event with space-like separation from the event consisting of the analysis and detection of 2, then the satisfaction of the Independence Conditions would be a consequence of the Special Theory of Relativity, according to which no causal influences can propagate with a velocity greater than the velocity of light in vacuo. Several experiments of increasing sophistication since 1982 have attempted to block the communication loophole in this way.

Aspect, Dalibard, and Roger (1982) published the results of an experiment in which the choices of the orientations of the analyzers of photons 1 and 2 were performed so rapidly that they were events with space-like separation. No physical modification was made of the analyzers themselves. Instead, switches consisting of vials of water in which standing waves were excited ultrasonically were placed in the paths of photons 1 and 2. When the wave is switched off, the photons propagate in the zeroth order of diffraction to polarization analyzers respectively oriented at angles a and b, and when it is switched on the photons propagate in the first order of diffraction to polarization analyzers respectively oriented at angles a′ and b′. The complete choices of orientation require time intervals of 6.7 ns and 13.37 ns respectively, much smaller than the 43 ns required for a signal traveling at the speed of light to pass between the switches. Prima facie, it is reasonable to suppose that the Independence Conditions are satisfied, and therefore that the coincidence counting rates, which agree with the quantum mechanical predictions, constitute a refutation of Bell's Inequality and hence of the family of Local Realistic Theories. There are, however, several imperfections in the experiment. First of all, the choices of orientations of the analyzers are not random, but are governed by the quasiperiodic establishment and removal of the standing acoustical waves in each switch. A scenario can be invented according to which the clever hidden variables of each analyzer inductively infer the choice made by the switch controlling the other analyzer and adjust accordingly their decision to transmit or to block an incident photon. Also, coincidence-counting technology is employed for detecting joint transmission of 1 and 2 through their respective analyzers, and this technology establishes an electronic link which could influence detection rates.
And because of the finite size of the apertures of the switches there is a spread of the angles of incidence about the Bragg angles, resulting in a loss of control of the directions of a non-negligible percentage of the outgoing photons.

The experiment of Tittel, Brendel, Zbinden, and Gisin (1998) did not directly address the communication loophole, but it threw some light indirectly on this question and also provided the most dramatic evidence so far of the maintenance of entanglement between well-separated particles of a pair. Pairs of photons were generated in Geneva and transmitted via optical-fiber cables, with very small probability per unit length of losing the photons, to two analyzing stations in suburbs of Geneva, located 10.9 kilometers apart on a great circle. The counting rates agreed well with the predictions of Quantum Mechanics and violated one of Bell's Inequalities. No precautions were taken to ensure that the choices of orientations of the two analyzers were events with space-like separation, but the great distance between the two analyzing stations makes it difficult to conceive a plausible scenario for a conspiracy that would violate Bell's Independence Conditions. Furthermore (and this is the feature which seems most to have captured the imagination of physicists), this experiment achieved much greater separation of the analyzers than ever before, thereby providing the best reply to date to a conjecture by Schrödinger (1935) that entanglement is a property that may dwindle with spatial separation.

The experiment that comes closest so far to closing the communication loophole is that of Weihs, Jennewein, Simon, Weinfurter, and Zeilinger (1998). The pairs of systems used to test a Bell's Inequality are photon pairs in the entangled polarization state

(48) $|\Psi\rangle = \tfrac{1}{\sqrt{2}}\left(|H\rangle_1 |V\rangle_2 - |V\rangle_1 |H\rangle_2\right)$,

where the ket |H⟩ represents horizontal polarization and |V⟩ represents vertical polarization. Each photon pair is produced from a photon of a laser beam by the down-conversion process in a nonlinear crystal. The momenta, and therefore the directions, of the daughter photons are strictly correlated, which ensures that a non-negligible proportion of the pairs jointly enter the (very small) apertures of two optical fibers, as was also achieved in the experiment of Tittel et al. The two stations to which the photon pairs are delivered are 400 m apart, a distance which light in vacuo traverses in 1.3 μs. Each photon emerging from an optical fiber enters a fixed two-channel polarizer (i.e., its exit channels are the ordinary ray and the extraordinary ray). Upstream from each polarizer is an electro-optic modulator, which rotates the polarization of a traversing photon by an angle proportional to the voltage applied to the modulator. Each modulator is driven, through an amplifier, by a very rapid generator which randomly causes one of two rotations of the polarization of the traversing photon. An essential feature of the experimental arrangement is that the generators applied to photons 1 and 2 are electronically independent. The rotations of the polarizations of 1 and 2 are effectively the same as randomly and rapidly switching the polarizer entered by 1 between two possible orientations a and a′, and the polarizer entered by 2 between two possible orientations b and b′. The output from each of the two exit channels of each polarizer goes to a separate detector, and a “time tag” is attached to each detected photon by means of an atomic clock. Coincidence counting is done after all the detections are collected, by comparing the time tags and retaining for the experimental statistics only those pairs whose tags are sufficiently close to each other to indicate a common origin in a single down-conversion process. Accidental coincidences will also enter, but these are calculated to be relatively infrequent. This procedure of coincidence counting eliminates the electronic connection between the detector of 1 and the detector of 2 while detection is taking place, a connection which conceivably could cause an error-generating transfer of information between the two stations. The total time for all the electronic and optical processes in the path of each photon, including the random generator, the electro-optic modulator, and the detector, is conservatively calculated to be smaller than 100 ns, which is much less than the 1.3 μs required for a light signal to pass between the two stations. With the choice made in Eq. (22) of the angles a, a′, b, and b′, and with imperfections in the detectors taken into account, the Quantum Mechanical prediction is

(49) $S_\psi \equiv E_\psi(a,b) + E_\psi(a,b') + E_\psi(a',b) - E_\psi(a',b') = 2.82,$

which is 0.82 greater than the upper limit of 2 allowed by the BCHSH Ineq. (16). The result obtained by Weihs et al. is $2.73 \pm 0.02$, in good agreement with the Quantum Mechanical prediction of Eq. (49), and 30 standard deviations away from the upper limit of Ineq. (16). Aspect, who designed the first experimental test of a Bell Inequality with rapidly switched analyzers (Aspect, Dalibard, Roger 1982), appreciatively summarized the import of this result:

I suggest we take the point of view of an external observer, who collects the data from the two distant stations at the end of the experiment, and compares the two series of results. This is what the Innsbruck team has done. Looking at the data a posteriori, they found that the correlation immediately changed as soon as one of the polarizers was switched, without any delay allowing for signal propagation: this reflects quantum non-separability. (Aspect 1999)
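The coincidence-counting procedure of Weihs et al. described above (collect all time tags first, then pair detections whose tags are close) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the Innsbruck analysis code: the event format (a time-sorted list of (time tag, result) pairs per station), the greedy earliest-partner matching rule, the function name `correlation_from_tags`, and the 6 ns window are all hypothetical, since the text does not specify a window size.

```python
def correlation_from_tags(events_1, events_2, window):
    """Coincidence counting by comparing time tags after data collection.

    events_1, events_2: lists of (time_tag_seconds, result) sorted by time,
    with result = +1 or -1 for the two exit channels of a polarizer.
    window: largest tag difference accepted as a coincidence (hypothetical).
    Returns the four joint counts and the correlation coefficient E.
    """
    counts = {(r1, r2): 0 for r1 in (+1, -1) for r2 in (+1, -1)}
    j = 0
    for t, r1 in events_1:
        # Station-2 tags earlier than t - window cannot match t or any later tag.
        while j < len(events_2) and events_2[j][0] < t - window:
            j += 1
        if j < len(events_2) and events_2[j][0] <= t + window:
            counts[(r1, events_2[j][1])] += 1
            j += 1  # each station-2 detection is paired at most once
    n = sum(counts.values())
    if n == 0:
        return counts, 0.0
    e = (counts[(1, 1)] + counts[(-1, -1)] - counts[(1, -1)] - counts[(-1, 1)]) / n
    return counts, e


# Toy data (hypothetical): the station-1 tag at 5 us and the station-2 tag
# at 7 us have no partner inside the window and are discarded.
events_1 = [(1.000e-6, +1), (5.000e-6, -1), (9.000e-6, +1)]
events_2 = [(1.002e-6, -1), (7.000e-6, +1), (9.001e-6, -1)]
window = 6e-9  # hypothetical 6 ns coincidence window
counts, e = correlation_from_tags(events_1, events_2, window)
print(counts, e)
```

Because the pairing is done offline, nothing links the two detectors while they are recording. A real analysis would also estimate the accidental coincidences mentioned in the text; this sketch simply keeps every pair that falls inside the window.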
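The numbers behind Eq. (49) can be checked with an ideal calculation for state (48). The sketch below assumes perfect detectors and computes each correlation from the four joint probabilities, which for this state gives $E(\alpha,\beta) = -\cos 2(\alpha-\beta)$. Eq. (22) is not reproduced in this section, so the analyzer angles used here ($a = 0^\circ$, $a' = 45^\circ$, $b = 112.5^\circ$, $b' = 67.5^\circ$) are an assumption, chosen to give the maximal positive violation with the sign convention of Eq. (49). The last lines repeat the light-travel-time arithmetic for the 400 m baseline.

```python
import math

INV_SQRT2 = 1 / math.sqrt(2)
# Coefficients PSI[i][j] of |i>_1 |j>_2 in Eq. (48), with index 0 = H, 1 = V.
PSI = [[0.0, INV_SQRT2], [-INV_SQRT2, 0.0]]


def channel_state(angle, result):
    """Polarization state sent to exit channel `result` of a polarizer at `angle`.

    Channel +1 passes cos(angle)|H> + sin(angle)|V>; channel -1 the orthogonal state.
    """
    if result == +1:
        return (math.cos(angle), math.sin(angle))
    return (-math.sin(angle), math.cos(angle))


def correlation(alpha, beta):
    """E(alpha, beta) = sum over results of r1 * r2 * P(r1, r2), ideal detectors."""
    total = 0.0
    for r1 in (+1, -1):
        for r2 in (+1, -1):
            u, v = channel_state(alpha, r1), channel_state(beta, r2)
            amp = sum(u[i] * v[j] * PSI[i][j] for i in range(2) for j in range(2))
            total += r1 * r2 * amp ** 2
    return total


# Assumed analyzer angles in radians (not taken from Eq. (22)).
a, a_p, b, b_p = 0.0, math.pi / 4, 5 * math.pi / 8, 3 * math.pi / 8
S = correlation(a, b) + correlation(a, b_p) + correlation(a_p, b) - correlation(a_p, b_p)

# Light-travel time across the 400 m baseline versus the 100 ns processing bound.
light_time = 400 / 299_792_458  # seconds
print(f"S = {S:.3f}, light time = {light_time * 1e6:.2f} us, "
      f"ratio to 100 ns = {light_time / 100e-9:.1f}")
```

With ideal detectors $S$ comes out at $2\sqrt{2} \approx 2.83$; the value 2.82 quoted in Eq. (49) includes detector imperfections, which this sketch does not model.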

The experiment of Weihs et al. does not completely block the detection loophole, and even if the experiment proposed by Fry and Walther is successfully completed, the detection loophole and the communication loophole will still have been blocked in two different experiments. It is therefore conceivable (though with difficulty, in the subjective judgment of the present writer) that both experiments are erroneous, because Nature took advantage of a separate loophole in each case. For this reason Fry and Walther suggest that their experiment using dissociated mercury dimers can in principle be refined by using electro-optic modulators (EOM), so as to block both loopholes: “Specifically, the EOM together with a beam splitting polarizer can, in a couple of nanoseconds, change the propagation direction of the excitation laser beam and hence the component of nuclear spin angular momentum being observed. A separation between our detectors of approximately 12 m will be necessary in order to close the locality loophole” (Fry & Walther 2002). [See Fig. 2, and note that “locality loophole” is their term for the communication loophole.]

In the face of the spectacular experimental achievement of Weihs et al. and the anticipated result of the experiment of Fry and Walther, there is little that a determined advocate of local realistic theories can say except that, despite the spacelike separation of the analysis-detection events involving particles 1 and 2, the backward light-cones of these two events overlap, and it is conceivable that some controlling factor in the overlap region is responsible for a conspiracy affecting their outcomes. This supposition has so little physical detail that discussion of it is best deferred to the methodological discussion in Section 7.

This section will discuss in some detail two variants of Bell's Theorem which depart in some respect from the conceptual framework presented in Section 2. Both are less general than the version in Section 2, because they apply only to a deterministic local realistic theory, that is, a theory in which a complete state $m$ assigns only probabilities 1 or 0 (‘yes’ or ‘no’) to the outcomes of the experimental tests performed on the systems of interest. By contrast, the Local Realistic Theories studied in Section 2 are allowed to be stochastic, in the sense that a complete state can assign probabilities strictly between 0 and 1 to the possible outcomes. At the end of the section two other variants will be mentioned briefly but not summarized in detail.

The first variant is due independently to Kochen,[3] Heywood and Redhead (1983), and Stairs (1983). Its ensemble of interest consists of pairs of spin-1 particles in the entangled state

(50) $|\Phi\rangle = \frac{1}{\sqrt{3}}\left[\,|z,1\rangle_1 |z,-1\rangle_2 - |z,0\rangle_1 |z,0\rangle_2 + |z,-1\rangle_1 |z,1\rangle_2\,\right],$

where $|z,i\rangle_1$, with $i = -1$, 0, or 1, is the spin state of particle 1 with component of spin $i$ along the axis $z$, and $|z,i\rangle_2$ has an analogous meaning for particle 2. If $x, y, z$ is a triple of orthogonal axes in 3-space, then the components $s_x$, $s_y$, $s_z$ of the spin operator along these axes do not pairwise commute; but it is a peculiarity of the spin-1 system that the squares of these operators, $s_x^2$, $s_y^2$, $s_z^2$, do commute, and therefore, in view of the considerations of Section 1, any two of them can constitute a context in the measurement of the third. If the operator of interest is $s_z^2$, the axes $x$ and $y$ can be any pair of orthogonal axes in the plane perpendicular to $z$, thus offering an infinite family of contexts for the measurement of $s_z^2$. As noted in Section 1, Bell exhibited the possibility of a contextual hidden variables theory for a quantum system whose Hilbert space has dimension 3 or greater, even though the Bell-Kochen-Specker theorem showed the impossibility of a non-contextual hidden variables theory for such a system. The strategy of Kochen and of Heywood-Redhead is to use the entangled state of Eq. (50) to predict the outcome of measuring $s_z^2$ for particle 2 (for any choice of $z$) by measuring its counterpart on particle 1. A specific complete state $m$ would determine whether $s_z^2$ of 1, measured together with a context in 1, is 0 or 1. Agreement with the Quantum Mechanical prediction of the entangled state of Eq. (50) implies that $s_z^2$ of 2 has the same value, 0 or 1. But if the Locality Conditions (8a,b) and (9a,b) are assumed, then the result of measuring $s_z^2$ on 2 must be independent of the remote context, that is, independent of the choice of $s_x^2$ and $s_y^2$ on 1, and hence, because of the perfect correlation, also independent of the choice of context on 2, for any pair of orthogonal directions $x$ and $y$ in the plane perpendicular to $z$. It follows that the Local Realistic Theory which supplies the complete state $m$ is not contextual after all, but maps the set of operators $s_z^2$ of 2, for every direction $z$, noncontextually into the pair of values {0, 1}. But that is impossible in view of the Bell-Kochen-Specker theorem. The conclusion is that no deterministic Local Realistic Theory is consistent with the Quantum Mechanical predictions of the entangled state (50). An alternative proof is thus provided for an important special case of Bell's Theorem, the case dealt with in Bell's pioneering paper of 1964: that no deterministic local realistic theory can agree with all the predictions of quantum mechanics. An objection may be raised that $s_z^2$ of 1 is in fact measured together with only a single context (e.g., $s_x^2$ and $s_y^2$), while other contexts are not measured, and “unperformed experiments have no results” (a famous remark of Peres 1978). This remark may be a correct epitome of the Copenhagen interpretation of quantum mechanics, but it is certainly not a valid statement in a deterministic version of a Local Realistic interpretation of Quantum Mechanics, because a deterministic complete state is just what is needed as the ground for a valid counterfactual conditional. Classical physics provides good evidence to this effect: for example, the charge of a particle, a quantity inferred from the actual acceleration of the particle when it is subjected to an actual electric field, provides, in conjunction with a well-confirmed force law, the basis for a counterfactual proposition about the acceleration of the particle if it were subjected to an electric field different from the actual one.
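The two quantum-mechanical facts the Kochen-Heywood-Redhead-Stairs argument relies on can be checked numerically: the squared spin-1 components commute pairwise (and, as is well known for spin 1, sum to $s(s+1) = 2$, so in any joint measurement the values are 1, 1, 0 in some order), and in state (50) the value of $s_n^2$ on particle 1 always equals that on particle 2, for any direction $n$. The sketch below uses the standard spin-1 matrices with $\hbar = 1$ and plain Python lists; the helper names (`matmul`, `kron`, `spin_along`, `squares_agree`) are illustrative and the tolerance of $10^{-12}$ is an arbitrary choice.

```python
import math

R = 1 / math.sqrt(2)
# Spin-1 matrices (hbar = 1) in the basis |z,1>, |z,0>, |z,-1>.
SX = [[0, R, 0], [R, 0, R], [0, R, 0]]
SY = [[0, -1j * R, 0], [1j * R, 0, -1j * R], [0, 1j * R, 0]]
SZ = [[1, 0, 0], [0, 0, 0], [0, 0, -1]]
I3 = [[1 if i == j else 0 for j in range(3)] for i in range(3)]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def kron(a, b):
    """Tensor product of two 3x3 matrices; index (i1, i2) maps to 3 * i1 + i2."""
    return [[a[i // 3][j // 3] * b[i % 3][j % 3] for j in range(9)] for i in range(9)]


def close(a, b, tol=1e-12):
    return all(abs(x - y) < tol for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def spin_along(polar, azimuth):
    """Spin component along the unit vector with the given polar and azimuthal angles."""
    n = (math.sin(polar) * math.cos(azimuth),
         math.sin(polar) * math.sin(azimuth),
         math.cos(polar))
    return [[n[0] * SX[i][j] + n[1] * SY[i][j] + n[2] * SZ[i][j] for j in range(3)]
            for i in range(3)]


# State (50); basis index 0, 1, 2 stands for spin component 1, 0, -1.
PHI = [0.0] * 9
PHI[3 * 0 + 2] = 1 / math.sqrt(3)   # |z,1>_1 |z,-1>_2
PHI[3 * 1 + 1] = -1 / math.sqrt(3)  # |z,0>_1 |z,0>_2
PHI[3 * 2 + 0] = 1 / math.sqrt(3)   # |z,-1>_1 |z,1>_2


def squares_agree(s):
    """True if (s^2 on 1) - (s^2 on 2) annihilates PHI, so the two results always agree."""
    s2 = matmul(s, s)
    d = [[x - y for x, y in zip(r1, r2)] for r1, r2 in zip(kron(s2, I3), kron(I3, s2))]
    return all(abs(sum(d[i][k] * PHI[k] for k in range(9))) < 1e-12 for i in range(9))


SQUARES = [matmul(s, s) for s in (SX, SY, SZ)]
pairwise_commute = all(close(matmul(p, q), matmul(q, p)) for p in SQUARES for q in SQUARES)
sum_is_two = close([[sum(m[i][j] for m in SQUARES) for j in range(3)] for i in range(3)],
                   [[2 * x for x in row] for row in I3])
print(pairwise_commute, sum_is_two, squares_agree(SZ), squares_agree(spin_along(0.7, 1.3)))
```

The oblique direction (polar angle 0.7, azimuth 1.3 radians) is an arbitrary example; the agreement holds for every direction because state (50) is the rotationally invariant total-spin-zero state of two spin-1 particles.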

A simpler proof of Bell's Theorem, also relying upon counterfactual reasoning and based upon a deterministic local realistic theory, is that of Hardy (1993), here presented in Laloë's (2001) formulation. Consider an ensemble of pairs 1 and 2 of spin-½ particles, the spin of 1 being measured along directions in the $xz$-plane making angles $a = 2\theta$ and $a' = 0$ with the $z$-axis (for spin-½, turning the measurement direction through $2\theta$ corresponds to the half-angle $\theta$ appearing in Eqs. (51a,b)), and angles $b$ and $b'$ having analogous significance for 2. The quantum states for particle 1 with spins $+\tfrac{1}{2}$ and $-\tfrac{1}{2}$ relative to direction $a'$ are respectively $|a',+\rangle_1$ and $|a',-\rangle_1$, and relative to direction $a$ are respectively

(51a) $|a,+\rangle_1 = \cos\theta\,|a',+\rangle_1 + \sin\theta\,|a',-\rangle_1$

and

(51b) $|a,-\rangle_1 = -\sin\theta\,|a',+\rangle_1 + \cos\theta\,|a',-\rangle_1;$

the spin states for 2 are analogous. The ensemble of interest is prepared in the entangled quantum state

(52) $|\Psi\rangle = -\cos\theta\,|a',+\rangle_1 |b',-\rangle_2 - \cos\theta\,|a',-\rangle_1 |b',+\rangle_2 + \sin\theta\,|a',+\rangle_1 |b',+\rangle_2$

(unnormalized, because normalization is not needed for the following argument). Then for the specified a, a′, b, and b′ the following quantum mechanical predictions hold:

(53) $\langle\Psi|\big(|a,+\rangle_1 |b',+\rangle_2\big) = 0;$

(54) $\langle\Psi|\big(|a',+\rangle_1 |b,+\rangle_2\big) = 0;$

(55) $\langle\Psi|\big(|a',-\rangle_1 |b',-\rangle_2\big) = 0;$

and, for almost all values of the $\theta$ of Eq. (52),

(56) $\langle\Psi|\big(|a,+\rangle_1 |b,+\rangle_2\big) \neq 0,$

with the corresponding normalized probability reaching its maximum, about 9%, near $\theta \approx 38^\circ$. Inequality (56) asserts that, for the specified angles, there is a non-empty subensemble $E'$ of pairs for which the results of a spin measurement along $a$ for 1 and along $b$ for 2 are both +. Eq. (53) implies the counterfactual proposition that if the spin of a 2 in $E'$ had been measured along $b'$, then with certainty the result would have been −; and likewise Eq. (54) implies the counterfactual proposition that if the spin of a 1 in $E'$ had been measured along $a'$, then with certainty the result would have been −. It is in this step that counterfactual reasoning is used in the argument, and, as in the argument of Kochen-Heywood-Redhead-Stairs in the previous paragraph, the reasoning is based upon the deterministic Local Realistic Theory. Because that theory is local, these counterfactual results do not depend on the direction chosen at the other station, so they can be combined: a pair in $E'$ would yield − along $a'$ for 1 and − along $b'$ for 2. Since the subensemble $E'$ is non-empty, we have reached a contradiction with Eq. (55), which asserts that if the spin of 1 is measured along $a'$ and that of 2 is measured along $b'$, then it is impossible that both results are −. The incompatibility of a deterministic Local Realistic Theory with Quantum Mechanics is thereby demonstrated.
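Hardy's conditions can be verified directly from Eqs. (51a,b) and (52). The sketch below writes the unnormalized state (52) as a 2×2 coefficient table in the primed bases, takes the unprimed states for both particles from Eqs. (51a,b) (the text says the states for 2 are analogous), and evaluates the four amplitudes of Eqs. (53) to (56). Since every component is real, no complex conjugation is needed. A coarse scan then locates the largest normalized probability for the outcome pair in Eq. (56); the grid spacing is an arbitrary choice, and the function names are illustrative.

```python
import math


def hardy_amplitudes(theta):
    """Amplitudes of Eqs. (53)-(56) for the unnormalized state of Eq. (52).

    Vectors are components in the primed basis: index 0 = '+', 1 = '-'.
    """
    c, s = math.cos(theta), math.sin(theta)
    # psi[i][j]: coefficient of |a',i>_1 |b',j>_2, read off Eq. (52).
    psi = [[s, -c], [-c, 0.0]]
    # Unprimed states in the primed basis, Eqs. (51a,b); the same for particle 2.
    unprimed = {"+": (c, s), "-": (-s, c)}
    primed = {"+": (1.0, 0.0), "-": (0.0, 1.0)}

    def amp(u, v):
        # All components are real, so the bra needs no complex conjugation.
        return sum(u[i] * v[j] * psi[i][j] for i in range(2) for j in range(2))

    return {
        "53": amp(unprimed["+"], primed["+"]),    # 1 along a: +, 2 along b': +
        "54": amp(primed["+"], unprimed["+"]),    # 1 along a': +, 2 along b: +
        "55": amp(primed["-"], primed["-"]),      # 1 along a': -, 2 along b': -
        "56": amp(unprimed["+"], unprimed["+"]),  # 1 along a: +, 2 along b: +
        "norm2": sum(x * x for row in psi for x in row),
    }


def hardy_probability(theta):
    """Normalized probability of the outcome pair in Eq. (56)."""
    amps = hardy_amplitudes(theta)
    return amps["56"] ** 2 / amps["norm2"]


# Coarse scan over theta in (0, pi/2) for the largest Hardy probability.
best = max((hardy_probability(k * math.pi / 2000), k * math.pi / 2000)
           for k in range(1, 1000))
print(f"max probability {best[0]:.4f} at theta = {math.degrees(best[1]):.1f} deg")
```

The amplitudes for Eqs. (53) to (55) vanish identically, while the one for Eq. (56) equals $-\sin\theta\cos^2\theta$. The scan places the maximum near $\theta \approx 38^\circ$, with a normalized probability of about 0.09.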

An attempt was made by Stapp (1997) to demonstrate a strengthened version of Bell's Theorem that dispenses with the conceptual framework of a Local Realistic Theory and uses instead the logic of counterfactual conditionals. His intricate argument has been criticized by Shimony and Stein (2001, 2003), who object to certain counterfactual conditionals that Stapp asserts by means of a “possible worlds” analysis without grounding them in a deterministic Local Realistic Theory; Stapp (2001) has responded, defending his argument with some modifications.